It looks like it may be time to roll over the search for narrow admissible sets to a new blog post, as we’re approaching 100 comments on the original thread.

In the meantime, an official polymath8 project has started. The wiki page is a good place to get started. Work to understand and improve the bounds in Zhang’s result on prime gaps has split into three main areas.

1) A reading seminar on Zhang’s Theorem 2.

2) A discussion on sieve theory, bridging the gap between Zhang’s Theorem 2 and the parameter k_0 (see also the follow-up post).

3) Efforts to find narrow admissible sets of a given cardinality k_0 — the width of the narrowest set we find gives the current best bound on prime gaps.

We started on 3) in the previous blog post, and now will continue here. I’ll try to summarize the situation.

Just recently there’s been a significant improvement in $k_0$, the desired cardinality of the admissible set, and we’re now looking at $k_0 = 34{,}429$. Hopefully there’s going to be a whole new round of techniques, made possible by the significantly smaller problem size.

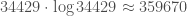

As I write this, the narrowest admissible set of size 34,429 found so far, due to Andrew Sutherland, has width 388,118.

This was found using the “greedy-greedy” algorithm. This starts with some chosen interval of integers, in this case [-185662,202456], and then sieves as follows. First discard 1 mod 2, and then 0 mod $p$ for primes $p \le b$, for some parameter $b$. (I’m not actually sure of the value of this parameter in Andrew’s best set.) After that, for each larger prime $p$ up to $k_0$ (larger primes cannot have all their residue classes occupied by only $k_0$ elements), we pick a minimally occupied residue class and sieve that out. Assuming we picked a sufficiently wide interval to begin with, when we’re done the resulting admissible set will still have at least $k_0$ elements.
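The steps above can be sketched in Python as follows. This is a minimal illustration, not Andrew’s C program: we assume $b$ defaults to the square root of the width (as noted further down the thread), that the greedy phase runs over the odd primes up to $k_0$, and that ties are broken toward the smallest or the largest residue. The names `greedy_greedy`, `is_admissible` and `primes_up_to` are hypothetical.

```python
import math


def primes_up_to(n):
    """All primes <= n (sieve of Eratosthenes)."""
    if n < 2:
        return []
    is_p = [True] * (n + 1)
    is_p[0] = is_p[1] = False
    for i in range(2, math.isqrt(n) + 1):
        if is_p[i]:
            for j in range(i * i, n + 1, i):
                is_p[j] = False
    return [i for i in range(n + 1) if is_p[i]]


def is_admissible(S):
    """True if S misses at least one residue class mod every prime p <= len(S)."""
    return all(len({n % p for n in S}) < p for p in primes_up_to(len(S)))


def greedy_greedy(lo, hi, k0, b=None, prefer_max=False):
    """Greedy-greedy sieve of [lo, hi]; narrowest k0 survivors, or None on abort."""
    if b is None:
        b = math.isqrt(hi - lo)  # assumption: b = sqrt(width of the interval)
    survivors = [n for n in range(lo, hi + 1) if n % 2 == 0]  # discard 1 mod 2
    for p in primes_up_to(k0)[1:]:  # odd primes up to k0
        if p <= b:
            a = 0  # small primes: sieve out 0 mod p
        else:
            counts = [0] * p
            for n in survivors:
                counts[n % p] += 1
            least = min(counts)
            ties = [r for r in range(p) if counts[r] == least]
            a = ties[-1] if prefer_max else ties[0]  # tie-breaking rule
        survivors = [n for n in survivors if n % p != a]
        if len(survivors) < k0:
            return None  # early abort: this interval is too narrow
    if len(survivors) < k0:
        return None
    # Every prime <= k0 now misses a class, so any k0 survivors are admissible;
    # keep the narrowest run of k0 consecutive survivors.
    i = min(range(len(survivors) - k0 + 1),
            key=lambda j: survivors[j + k0 - 1] - survivors[j])
    return survivors[i:i + k0]
```

For example, `greedy_greedy(0, 60, 10)` only uses the fixed 0 mod $p$ steps (here $b = 7$) and returns a 10-element set of diameter 42. Passing `prefer_max=True` corresponds to breaking ties upward, as suggested later in the thread.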

More generally, there are several directions worth pursuing:

1. sharpening bounds on $\rho^*(x)$, the maximal cardinality of an admissible set of width at most $x$,

2. finding new constructions of admissible sets of a given size (and also ‘almost-admissible’ sequences)

3. developing algorithms or search techniques to find narrow admissible sets, perhaps starting from a wider or smaller admissible set, or starting from an ‘almost-admissible’ set.

(If these questions carry us in different directions, there’s always more room on the internet!)

For sufficiently small sizes (at most 372), everything is completely understood due to exhaustive computer searches described at http://www.opertech.com/primes/k-tuples.html. At least for now, we need to look at much larger sizes, so obtaining bounds and finding probabilistic methods is probably the right approach.

I’m writing this on a bus, beginning 30 hours of travel. (To be followed by a short sleep then an intense 3 day conference!) So my apologies if I missed something important!

I’m curious about how small k_0 would have to be before it’s possible to do exhaustive searches. Here’s what I’d try. We’re going to try searching the space of all residue classes for primes up to $k_0$, thought of as a tree. That is, we’ll choose a residue class for 2, and having made that choice one for 3, and so on.

We are then going to sieve out these residue classes, from the interval [0, W], where W is the best known width for the desired cardinality.

Of course, this tree is ridiculously large! (Somewhere around 10^250 for the current k_0.) But two things help significantly. First, sometimes, especially for large primes, there are multiple empty residue classes, and so these branches of the tree “glom together”. Second, as soon as the number of elements remaining drops below k_0, we can prune off the entire branch of the tree below. It’s possible that, starting with an already good W, this will drastically decrease the search space.
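A minimal sketch of this tree search, assuming the interval is $[0, W]$ and the tree runs over the primes up to $k_0$. One detail goes slightly beyond the glomming described above: if some class mod $p$ is already empty, removing it changes nothing, and any other choice only leaves a subset of those survivors, so that single branch suffices. This is only feasible for tiny $k_0$; `exists_admissible` is a hypothetical name.

```python
import math


def primes_up_to(n):
    """All primes <= n (sieve of Eratosthenes)."""
    if n < 2:
        return []
    is_p = [True] * (n + 1)
    is_p[0] = is_p[1] = False
    for i in range(2, math.isqrt(n) + 1):
        if is_p[i]:
            for j in range(i * i, n + 1, i):
                is_p[j] = False
    return [i for i in range(n + 1) if is_p[i]]


def exists_admissible(k0, W):
    """Exhaustive tree search: does [0, W] contain an admissible k0-tuple?"""
    primes = primes_up_to(k0)

    def search(i, survivors):
        if len(survivors) < k0:
            return False  # prune: this branch can never reach k0 elements
        if i == len(primes):
            return True  # survivors miss a class mod every prime <= k0
        p = primes[i]
        counts = [0] * p
        for n in survivors:
            counts[n % p] += 1
        if 0 in counts:
            # An empty class: removing it changes nothing, and every other
            # choice leaves a subset, so one branch suffices ("glomming").
            return search(i + 1, survivors)
        # Otherwise branch on every class, least occupied first.
        for a in sorted(range(p), key=lambda r: counts[r]):
            if search(i + 1, [n for n in survivors if n % p != a]):
                return True
        return False

    return search(0, list(range(W + 1)))
```

For example, `exists_admissible(10, 32)` succeeds while `exists_admissible(10, 31)` fails, matching the known minimal diameter 32 for ten elements.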

I’d be really interested in knowing the running time of such a search! There’s a nice trick for estimating this, by doing random descents down the tree, described beautifully by Knuth in “Estimating the efficiency of backtrack programs” ftp://reports.stanford.edu/pub/cstr/reports/cs/tr/74/442/CS-TR-74-442.pdf
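Knuth’s estimator can be sketched on the same pruned and glommed tree: follow one random path from the root, multiply the branching factors met along the way, and add up the running products. The expectation of this sum equals the number of nodes in the tree, so averaging many descents estimates the cost of the full (non-stopping) search. A sketch under those assumptions, with hypothetical names; `count_nodes` is only there to check the estimate on a tiny case.

```python
import math
import random


def primes_up_to(n):
    """All primes <= n (sieve of Eratosthenes)."""
    if n < 2:
        return []
    is_p = [True] * (n + 1)
    is_p[0] = is_p[1] = False
    for i in range(2, math.isqrt(n) + 1):
        if is_p[i]:
            for j in range(i * i, n + 1, i):
                is_p[j] = False
    return [i for i in range(n + 1) if is_p[i]]


def children(survivors, p, k0):
    """Child nodes at prime p, after glomming empty classes and pruning."""
    counts = [0] * p
    for n in survivors:
        counts[n % p] += 1
    if 0 in counts:
        return [survivors]  # an empty class exists: a single glommed branch
    kids = []
    for a in range(p):
        kept = [n for n in survivors if n % p != a]
        if len(kept) >= k0:  # prune branches that drop below k0
            kids.append(kept)
    return kids


def count_nodes(survivors, i, primes, k0):
    """Exact node count of the pruned tree (only feasible for tiny cases)."""
    if i == len(primes):
        return 1
    return 1 + sum(count_nodes(kid, i + 1, primes, k0)
                   for kid in children(survivors, primes[i], k0))


def knuth_estimate(k0, W, rng):
    """One random descent; an unbiased estimate of the number of nodes."""
    survivors = list(range(W + 1))
    total, weight = 1.0, 1.0
    for p in primes_up_to(k0):
        kids = children(survivors, p, k0)
        if not kids:
            break
        weight *= len(kids)  # product of branching factors so far
        total += weight
        survivors = rng.choice(kids)
    return total
```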

It might also be possible to do hybrid searches, which are not exhaustive, starting with a greedy algorithm and switching over at some point to this tree search. In the greedy-greedy algorithm we take advantage of “knowing” that 1 mod 2 and 0 mod p for small p are best, and I’m not sure how to incorporate this idea.

If you could post a link on the wiki page to this new thread it would be good (I don’t have editing privileges).

Will do. In fact, I think anyone who has confirmed an email address can edit the wiki.

I just added a link to the wiki.

Regarding exact computations, Section 4 of the Gordon-Rodemich paper http://www.ccrwest.org/gordon/ants.pdf is on “computing $\rho^*$ efficiently”. There is also the paper of Clark and Jarvis http://www.ams.org/journals/mcom/2001-70-236/S0025-5718-01-01348-5/S0025-5718-01-01348-5.pdf which computes $\rho^*(x)$ exactly for a larger range of $x$, extending the Engelsma data. I haven’t looked at these two papers in detail though. ($\rho^*(x)$ is the largest size of admissible tuple one can fit inside an interval of length $x$.)

@Scott: the parameter $b$ is the square root of the width of the interval.

Thanks (just a comment to subscribe to comments here).

I have the impression that Drew’s greedy-greedy algorithm (post #77 of the previous thread) performs slightly better when, in the case of ties, one chooses the largest a in [0, p-1] rather than the smallest.

Applying this on input [-180584, 207398] yields an admissible 34429-tuple with the same end points, hence of diameter 387982.

A simple C implementation of the “greedy-greedy” algorithm described above is available at

http://math.mit.edu/~drew/admissable_v0.1.tar

I have to admit I’m having trouble parsing the algorithm in the Clark and Jarvis paper (In particular Step 3 has two ifs and one otherwise…) But it does look like it has the right ingredients, and presumably takes advantage of both the “glomming” and “pruning” in my comment above.

(small improvement over #6: input [-180568,207406] yields diameter 387974)

Thanks for sharing the code and the output, Andrew! It helped me uncover a bug in my code (https://github.com/vit-tucek/admissible_sets). I tried to compile and run your program, but it didn’t reproduce the output, claiming that it did not find an admissible set of the appropriate size. For larger intervals it segfaulted. I was curious about the running time. My implementation of your algorithm takes around 25 seconds on a decent processor, and it is pretty high-level code, so one can experiment easily with different designs. Also, the plotting capabilities of matplotlib might help to see what is going on…

I updated the source code listing at

http://math.mit.edu/~drew/admissable_v0.1.tar

to fix a bug that caused a segfault on some inputs (this bug did not affect the correctness of the output).

I also added an optional argument to change how ties are broken, per Wouter’s suggestion (I note that it isn’t always better to break ties in one direction or the other; it’s worth trying both). To reproduce Wouter’s new record, type “gg -180568 207406 1”.

@Vit: It takes about 2 seconds (single threaded) on my machine. This can easily be improved to well under 1 second with various optimizations that I took out of the version I posted in order to make it easier for people to follow (these optimizations mattered a lot more when $k_0$ was still 361,460).

In the spirit of making a tiny improvement just for the sake of it, I obtained a diameter of 387960 by doing the following. I used the interval [-180568, 207392] and I followed Wouter’s suggestion of maximizing a in [0, p-1] in the case of ties, rather than minimizing it, except for p=1567 in which case I chose a=0 rather than 1202.

I’m fairly sure that this record will be broken soon. But at least I learned a little bit of programming.

Using the interval [-205892,182018] and breaking ties upward yields an admissible sequence with diameter 387,910. To reproduce with the program posted above, use “gg -205892 182018 1”.

The sequence can be found at:

http://math.mit.edu/~drew/admissable_34429_387910.txt

Hi!

I just found out about this interesting project and I am still reading up on what you have done.

To test my understanding a bit I would like to ask why this is not a useful admissible set at this point?

http://abel.math.umu.se/~klasm/test-admiss-1.txt

Using the interval [-205892, 182012] and breaking ties upward except for the prime p=1787 yields an admissible sequence with diameter 387904.

@Klas: your set is admissible, but it consists of 1824 elements only. At this moment, we are looking for sets of size 34429.

Here are some further suggestions for the experts in programming:

(Andrew, many thanks for checking my last one, it wasn’t to be…):

On the question of which residue class $a \bmod p$ to choose:

1) a priori I don’t see any argument in favour of anything deterministic like “min” or “max”. One could try to choose a random one from the set of $a$’s defining the tie.

As this increases the search space, maybe one gets some small improvement.

2) After sieving modulo the primes (say up to the square root of the interval size), maybe one should *not* sieve further residue classes but just remove individual integers:

One identifies the set of primes modulo which the set is not admissible (say bad primes).

For each bad prime $p$, one identifies the residue classes with least frequency, but then goes back to the corresponding integers before reduction mod $p$. Let’s call these the bad integers mod $p$.

Do this modulo several primes (say $p_1$ and $p_2$ for simplicity).

Then see if there are integers (say $n$) which are bad modulo both $p_1$ and $p_2$. Say $n \equiv a_1 \bmod p_1$ and $n \equiv a_2 \bmod p_2$. One can try to remove this “very bad” $n$ first. Removing the classes $a_1 \bmod p_1$ and $a_2 \bmod p_2$ would have been a waste, as they just intersect in $n$.

More generally, for those integers surviving the first sieving modulo small primes attach a weight, depending on how bad they behave modulo the bad primes, and remove the very bad integers first.

Comment:

As the product of any two (or more) bad primes is large,

this would seem useless when removing the class 0 mod p.

But at this sieve step we remove other classes as well.
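The simplest version of this idea can be sketched as follows; this is our own illustration, not something implemented in the thread. For each bad prime, every integer lying in a least frequent class gets weight 1, weights are summed over the bad primes, and the single integer with the largest weight is deleted, repeating until the set is admissible. The names are hypothetical.

```python
import math
from collections import Counter


def primes_up_to(n):
    """All primes <= n (sieve of Eratosthenes)."""
    if n < 2:
        return []
    is_p = [True] * (n + 1)
    is_p[0] = is_p[1] = False
    for i in range(2, math.isqrt(n) + 1):
        if is_p[i]:
            for j in range(i * i, n + 1, i):
                is_p[j] = False
    return [i for i in range(n + 1) if is_p[i]]


def is_admissible(S):
    """True if S misses at least one residue class mod every prime p <= len(S)."""
    return all(len({n % p for n in S}) < p for p in primes_up_to(len(S)))


def bad_primes(S):
    """Primes p <= len(S) for which S occupies every residue class."""
    return [p for p in primes_up_to(len(S)) if len({n % p for n in S}) == p]


def remove_very_bad(S):
    """Delete individual integers, worst first, until S is admissible."""
    S = sorted(set(S))
    while True:
        bad = bad_primes(S)
        if not bad:
            return S
        weight = Counter()
        for p in bad:
            freq = Counter(n % p for n in S)
            least = min(freq.values())
            for n in S:
                if freq[n % p] == least:  # n is a "bad integer mod p"
                    weight[n] += 1
        worst = max(S, key=lambda n: weight[n])  # bad for the most primes
        S.remove(worst)
```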

Regarding Christian’s suggestion 1 in post 16, for whatever reason I seem to get better results if I randomly pick either the min or max tied residue class rather than randomizing over all of them.

In any case, here is a sequence with diameter 387814 obtained using the interval [-205796,182018] with the greedy-greedy algorithm and randomly choosing either the min or max residue class when breaking ties:

http://math.mit.edu/~drew/admissable_34429_387814.txt

A slight further improvement on the post above, using the interval [-205754,182012] with diameter 387,766.

http://math.mit.edu/~drew/admissable_34429_387766.txt

It might be worth redoing the older sieves (Zhang’s sieve, the Hensley-Richards sieve, or the shifted Hensley-Richards sieve) for the latest value $k_0 = 34{,}429$ as a benchmark for the most recent improvements. (For instance, by comparing Hensley-Richards with shifted Hensley-Richards one can get some estimation as to the amount of savings that shifting the interval would be expected to provide.)
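As a rough illustration of the Zhang benchmark, the sketch below scans windows $\{p_{m+1}, \dots, p_{m+k_0}\}$ of consecutive primes and keeps the admissible window of smallest diameter for $m \le m_{\max}$. Reading “optimal Zhang sequence” this way is our assumption, and the brute-force admissibility test is only practical for small $k_0$; the names are hypothetical.

```python
import math


def primes_up_to(n):
    """All primes <= n (sieve of Eratosthenes)."""
    if n < 2:
        return []
    is_p = [True] * (n + 1)
    is_p[0] = is_p[1] = False
    for i in range(2, math.isqrt(n) + 1):
        if is_p[i]:
            for j in range(i * i, n + 1, i):
                is_p[j] = False
    return [i for i in range(n + 1) if is_p[i]]


def is_admissible(S):
    """True if S misses at least one residue class mod every prime p <= len(S)."""
    return all(len({n % p for n in S}) < p for p in primes_up_to(len(S)))


def first_primes(count):
    """The first `count` primes, doubling the sieve limit until enough are found."""
    limit = 16
    while True:
        ps = primes_up_to(limit)
        if len(ps) >= count:
            return ps[:count]
        limit *= 2


def zhang_benchmark(k0, m_max):
    """(m, diameter) of the narrowest admissible window p_{m+1..m+k0}, m <= m_max."""
    ps = first_primes(m_max + k0)
    best = None
    for m in range(m_max + 1):
        window = ps[m:m + k0]  # p_{m+1}, ..., p_{m+k0}
        if is_admissible(window):
            diam = window[-1] - window[0]
            if best is None or diam < best[1]:
                best = (m, diam)
    return best
```

For $k_0 = 10$ this returns $m = 4$ and diameter 32 (the window 11, 13, …, 43).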

In the other direction, the Montgomery-Vaughan large sieve inequality http://www.ams.org/mathscinet-getitem?mr=374060 gives $k_0 \le \frac{2H}{\log H}$ for an admissible $k_0$-tuple of diameter $H$, which gives a lower bound of $H \ge 211{,}047$ without any further improvements in $k_0$. (This lower bound is unlikely to be sharp though.)
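The figure of 211,047 can be checked numerically, assuming the bound has the form $k_0 \le 2H/\log H$ (the form of Montgomery-Vaughan’s (1.12) quoted later in the thread). Since $2H/\log H$ is increasing for $H > e$, a binary search finds the smallest $H$ compatible with $k_0 = 34{,}429$. The function name is hypothetical.

```python
import math


def mv_lower_bound(k0):
    """Smallest integer H >= 3 with 2H / log(H) >= k0."""
    def f(h):
        return 2 * h / math.log(h)  # increasing for h > e

    lo, hi = 3, 3
    while f(hi) < k0:
        hi *= 2  # grow until f(hi) >= k0
    while lo < hi:  # invariant: f(hi) >= k0
        mid = (lo + hi) // 2
        if f(mid) >= k0:
            hi = mid
        else:
            lo = mid + 1
    return lo
```

`mv_lower_bound(34429)` gives 211047.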

Given an admissible set, one can try to make it shorter by replacing the endpoints.

For instance, I applied the following to Drew’s interval #18. Let max be the maximal entry of this interval. Then factorize max – j*30030 for j = 1, 2, … until one finds a power of 2 times a large prime, and replace max by max – j*30030.

The factor 30030 = 2*3*5*7*11*13 ensures that we are okay modulo the first few primes. The large prime factor gives us a reasonable chance of surviving, as most of the time we sieved 0 mod p.

Doing this 17 consecutive times results in an admissible set of 34429 elements, of diameter 387570. When one does it 18 times, the result is no longer admissible. If one proceeds by doing the similar thing for the minimum (4 times; 5 times spoiled admissibility) one ends up with an admissible set of diameter 387540. There’s definitely much room for improvement here.

(While typing this I realize that there is no big point in using 30030, in the end.)
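A sketch of the right-endpoint version of this replacement, with two simplifications that are our own: candidates $\max - j \cdot \mathrm{step}$ are tried without the factorisation heuristic, and each candidate is tested directly for admissibility. The membership test guards against silently introducing a duplicate entry, which would leave fewer than $k_0$ distinct elements. The name `shrink_from_right` is hypothetical.

```python
import math


def primes_up_to(n):
    """All primes <= n (sieve of Eratosthenes)."""
    if n < 2:
        return []
    is_p = [True] * (n + 1)
    is_p[0] = is_p[1] = False
    for i in range(2, math.isqrt(n) + 1):
        if is_p[i]:
            for j in range(i * i, n + 1, i):
                is_p[j] = False
    return [i for i in range(n + 1) if is_p[i]]


def is_admissible(S):
    """True if S misses at least one residue class mod every prime p <= len(S)."""
    return all(len({n % p for n in S}) < p for p in primes_up_to(len(S)))


def shrink_from_right(S, step, max_j=10**4):
    """Repeatedly replace max(S) by max(S) - j*step while S stays admissible."""
    S = sorted(S)
    improved = True
    while improved and len(S) >= 2:
        improved = False
        members = set(S)
        top = S[-1]
        for j in range(1, max_j + 1):
            c = top - j * step
            if c <= S[0]:
                break  # would no longer shrink the set from the right
            if c in members:
                continue  # skip: this would create a duplicate entry
            candidate = sorted(S[:-1] + [c])
            if is_admissible(candidate):
                S = candidate  # the maximum strictly decreased
                improved = True
                break
    return S
```

For example, starting from the admissible set {0, 2, 6, 8, 12, 18, 20, 26, 30, 36} with step 2, the maximum 36 is replaced by 32, after which no further replacement succeeds.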

While this looks like it may have been superseded by Wouter’s recent improvement, I’ll post this sequence of diameter 387,754 just in case it’s useful as input to further optimization:

http://math.mit.edu/~drew/admissable_34429_387754.txt

@terry: The optimal Zhang sequence for k0=34429 appears to use m=386 and has diameter 411,932. I can take a look at the other cases this afternoon.

I withdraw my above claim (#21). My resulting set contained duplicates (I thought the Magma function I used took care of that, but apparently it didn’t). Thanks to Drew for pointing this out.

What is the relationship between the diameter and Zhang’s bound? In particular, what would in-principle reaching that H=211,047 imply with k0 fixed? In other words, what is the new bound if your sub-project succeeds and the others fail?

@Andrew: Thanks for working it out! So the more advanced sieving methods are shaving about 6% over the Zhang sieve – a relatively modest savings, but every little bit helps :).

@Daniel: H is the bound on prime gaps, so if we theoretically found an admissible 34,429-tuple of diameter 211,047 (which I doubt), this would imply that there are infinitely many pairs of primes of distance at most 211,047 apart.

Incidentally, the value of $k_0$ is likely to be stable for a few days; the process of converting a value of $\varpi$ to a value of $k_0$ is more or less completely optimised now as far as I can tell, and it will take several days more for enough of us to read through the second half of Zhang’s paper to get a confirmed value of $\varpi$ (though, informally, we’re beginning to suspect that values on the order of 1/300 or so might be achievable, up from the current value of 1/1168, which should improve $k_0$ by a factor of maybe sixfold or so, but this is all completely unofficial at this point).

Just to share what I noted to Wouter offline: for most of the sequences I have posted above, it is not possible to improve them by replacing an endpoint with an interior point. Taking the most recently posted sequence with diameter 387754 as an example, there are 790 primes (including all primes less than 4679) for which all but 1 residue class is occupied by elements of the sequence that are not endpoints. If you sieve the interval by removing just these unoccupied residue classes, no integers survive other than elements already in the sequence.

The one exception is the sequence with diameter 387910: the integer 87254, which is not currently in the sequence, could be added, and removing the last entry 182018 then reduces the diameter by 6 (incidentally, I expect this is exactly what happened with the optimization in post 14, which reduced the diameter to 387904).
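The check described above can be sketched as follows; this is our reconstruction of the procedure, with hypothetical names. For each prime $p \le k_0$ where the non-endpoint elements occupy all but one class, that free class is sieved out of the open interval (adding a member of it would make the set inadmissible mod $p$); the survivors are the only integers that could replace an endpoint, and each is then tested directly.

```python
import math


def primes_up_to(n):
    """All primes <= n (sieve of Eratosthenes)."""
    if n < 2:
        return []
    is_p = [True] * (n + 1)
    is_p[0] = is_p[1] = False
    for i in range(2, math.isqrt(n) + 1):
        if is_p[i]:
            for j in range(i * i, n + 1, i):
                is_p[j] = False
    return [i for i in range(n + 1) if is_p[i]]


def is_admissible(S):
    """True if S misses at least one residue class mod every prime p <= len(S)."""
    return all(len({n % p for n in S}) < p for p in primes_up_to(len(S)))


def free_class_sieve(S):
    """Integers strictly inside (min S, max S), not in S, avoiding forced classes."""
    S = sorted(S)
    interior = S[1:-1]  # elements that are not endpoints
    survivors = set(range(S[0] + 1, S[-1])) - set(S)
    for p in primes_up_to(len(S)):
        occupied = {n % p for n in interior}
        if len(occupied) == p - 1:  # exactly one class left unoccupied
            free = (set(range(p)) - occupied).pop()
            survivors = {n for n in survivors if n % p != free}
    return sorted(survivors)


def improving_swaps(S):
    """(endpoint removed, integer added) pairs that keep S admissible and narrower."""
    S = sorted(S)
    width = S[-1] - S[0]
    swaps = []
    for c in free_class_sieve(S):
        for e in (S[0], S[-1]):
            T = sorted((set(S) - {e}) | {c})
            if T[-1] - T[0] < width and is_admissible(T):
                swaps.append((e, c))
    return swaps
```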

Up through comment #16 on this post I’ve been able to reproduce the results here with my own code, but with the introduction of the randomization step in #17, I’ve gotten stuck; Drew, if you wouldn’t mind answering a couple of questions about what you’re doing, I’d be appreciative.

(1) When you produced the admissible set in post #17 (to fix an example), did you begin with a guess of the interval [-205796,182018]? Or did you begin with a slightly wider interval (if so, how much wider?), sieve, and then pick the narrowest 34429 elements of the ones that remained? (Unless I’m blundering somewhere, the wider a starting interval I choose the wider the output of the greedy-greedy algorithm seems to be, so there seems to be some art here.)

(2) Given the randomization step, how many times (to an order of magnitude) did you have to run the randomized procedure until you hit the particular admissible set that you found?

With the Montgomery-Vaughan large sieve inequality I only seem to be getting a weaker lower bound than Terry’s $H \ge 211{,}047$. Sage tells me that in this range sieving up to $Q = 131$ is optimal, and then the inequality $k_0 \sum_{q \le Q} \frac{\mu^2(q)}{\varphi(q)} \le H + Q^2$ yields $H \ge k_0 \sum_{q \le Q} \frac{\mu^2(q)}{\varphi(q)} - Q^2$.

Regarding endpoint issues, one very small step one could take here comes when one has to break ties among residue classes that kill off the same number of survivors; perhaps residue classes that kill off a survivor nearer to (say) the right endpoint should be favored over ones which stay away from that endpoint, in order to hopefully capture some gain from shifting the interval to the left. (This may also have the side benefit of effectively randomising one’s choice in a way which is reproducible, as per comment #27.) I don’t know how effective this sort of ordering will be though.

Here is the Sage code I used:

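```sage
# Lower bound on H from k*S <= H + Q^2, with S = sum_{q <= Q} mu(q)^2 / phi(q)
k = Integer(34429)
Q = Integer(131)
S = sum([(moebius(q)^2 / euler_phi(q)).n(digits=10) for q in [1..Q]])
print(k*S - Q^2)
```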
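For readers without Sage, here is a plain-Python sketch of the same computation, with $\mu^2(q)$ and $\varphi(q)$ obtained by trial division. The function names are hypothetical; as in the Sage snippet, we assume the bound reads $k_0 \sum_{q \le Q} \mu^2(q)/\varphi(q) \le H + Q^2$.

```python
def factorize(n):
    """Prime factorisation of n >= 1 as {prime: exponent}, by trial division."""
    factors = {}
    d = 2
    while d * d <= n:
        while n % d == 0:
            factors[d] = factors.get(d, 0) + 1
            n //= d
        d += 1
    if n > 1:
        factors[n] = factors.get(n, 0) + 1
    return factors


def mv_first_bound(k0, Q):
    """k0 * sum_{q <= Q} mu(q)^2 / phi(q) - Q^2, a lower bound on H."""
    total = 0.0
    for q in range(1, Q + 1):
        f = factorize(q)
        if any(e > 1 for e in f.values()):
            continue  # q not squarefree, so mu(q)^2 = 0
        phi = 1
        for p in f:
            phi *= p - 1  # phi(q) for squarefree q
        total += 1.0 / phi
    return k0 * total - Q * Q
```

`mv_first_bound(34429, 131)` mirrors the Sage output above.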

@Gergely: there are two large sieve inequalities in Montgomery-Vaughan. The first, easier inequality ((1.4) in their paper) gives the bound you write, but there is a more difficult inequality (1.6) which they use to show that $\pi(x+N) - \pi(x) \le \frac{2N}{\log N}$ ((1.12) in their paper, or the k=1 case of (1.10)). This together with the prime tuples conjecture already tells us that admissible sets in an interval of length N can have size at most $\frac{2N}{\log N}$. But the Montgomery-Vaughan argument also works in our setting without prime tuples. From Corollary 1 of that paper we obtain a weighted version of the large sieve inequality, valid for any $Q$. Montgomery and Vaughan pick a specific $Q$ and use some standard estimates to eventually bound the right-hand side by $\frac{2N}{\log N}$ (actually they have a bit of room to spare, since they also absorb a lower-order term which we don’t need here). But with the values of $N$ we have here, we could perhaps perform the optimisation of $Q$ numerically and improve upon 211,047.

There is a minor typo in post 22, I should have written m=836, not m=386.

@pedant: Yes, the randomness makes reproducibility a pain, which is one of the reasons I have been posting the sequences (in theory of course the computations are reproducible in the sense that I can give you a program that is guaranteed to *eventually* cough up the sequence, but you might have to be very patient). Regarding your specific questions:

(1) I generally start with an interval that is already about as tight as the best diameter I already know (or even slightly tighter). One advantage of doing this is that it allows you to do an early abort as soon as the number of unsieved integers falls below k_0, and this speeds things up substantially (one can then try again with a slightly shifted interval, effectively covering a larger interval than you could have started with in the first place).

(2) For the sequence in post 17, something on the order of 10^4 iterations. For the sequence in post 22, more like 10^5 or 10^6.

The M-V paper by the way is at http://journals.cambridge.org/download.php?file=%2FMTK%2FMTK20_02%2FS0025579300004708a.pdf&code=aa9418bce94e63604579326df0a7aa58 . And a typo: N should be H throughout to be consistent with our notation rather than M-V notation.

Actually, to be _really_ pedantic, MV’s N is actually H+1 in our notation, rather than H, but I’m sure we can ignore this technicality for now :)

Just a thought that it might be a good idea to publish this problem as a programming puzzle, for example at IBM’s monthly “Ponder This” challenge (http://domino.research.ibm.com/Comm/wwwr_ponder.nsf/pages/index.html). This would open it up to many talented programmers and more computing resources. I sent them a note describing a potential programming challenge of finding dense admissible sets, but I’m sure there are other similar forums which might be effective.

I have been doing some experiments with this during the afternoon, trying different ways of constructing admissible sets (now of the right size too). None of my attempts have managed to improve the current records, but I have noted that it seems to be quite easy to construct sets of the right size with diameters between 400,000 and 450,000. More or less every not obviously bad construction method I have tried finds something in that range.

All the methods I have tried are of the form: start with an interval, and for each prime delete all remaining numbers in one residue class, where the residue class is chosen in different ways.

One robust choice seems to be to pick the residue class of the largest remaining number in the interval.

Picking the least common residue class, which might be empty, also works quite well, but produces sets with larger diameter.

These are of course rather crude methods, but I found it interesting that even they don’t land too far from the record holding sets.
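A sketch of the “largest remaining number” rule: for each prime up to $k_0$ in turn, remove the residue class of the current maximum, which always trims the interval from the right. Sieving over primes up to $k_0$ and returning the narrowest $k_0$ consecutive survivors are our assumptions; the name is hypothetical.

```python
import math


def primes_up_to(n):
    """All primes <= n (sieve of Eratosthenes)."""
    if n < 2:
        return []
    is_p = [True] * (n + 1)
    is_p[0] = is_p[1] = False
    for i in range(2, math.isqrt(n) + 1):
        if is_p[i]:
            for j in range(i * i, n + 1, i):
                is_p[j] = False
    return [i for i in range(n + 1) if is_p[i]]


def largest_class_sieve(lo, hi, k0):
    """For each prime <= k0, delete the class of the largest survivor."""
    if hi - lo + 1 < k0:
        return None
    survivors = list(range(lo, hi + 1))
    for p in primes_up_to(k0):
        a = survivors[-1] % p  # residue class of the largest remaining number
        survivors = [n for n in survivors if n % p != a]
        if len(survivors) < k0:
            return None
    # Narrowest run of k0 consecutive survivors (all admissible by construction).
    i = min(range(len(survivors) - k0 + 1),
            key=lambda j: survivors[j + k0 - 1] - survivors[j])
    return survivors[i:i + k0]
```

For instance, `largest_class_sieve(0, 20, 4)` removes the even numbers, then 1 mod 3, and returns [3, 5, 9, 11].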

@Drew: Thanks! Your answer to (2) explains everything, as my code is an order of magnitude slower than yours and so I haven’t run nearly as many iterations as you have. (-:

(Also, yes, I’ve been doing the same abort that you’re doing when the number of unsieved integers falls below k_0.)

For comparison, the Hensley-Richards sieve with […] starting at […] to […] has diameter […].

@Terry: Thanks for your valuable comments. The improved inequality yields $H \ge […]$. More precisely, $N = […]$ and $Q = […]$ contradict the inequality.

@Bryan: This might be a good idea once the polymath8 project plateaus (in particular, once we get consensus on an optimised value of $\varpi$ and hence $k_0$). There is the possibility that $k_0$ could drop by as much as one further order of magnitude in the weeks to come, which could open up a lot more optimisations than what we are doing right now. By that point we may have some optimised algorithm which may for instance be parallelisable and which could indeed be very amenable to these sorts of challenges.

To finish off the answer to Terry’s question from post 19: as noted in post 22, the optimal Zhang sequence for k0=34429 appears to have m=836 and diameter 411,932. The optimal Hensley-Richards sequence appears to have m=876 and diameter 402,790, and the optimal asymmetric Hensley-Richards sequence appears to have m=811, i=21204 and diameter 401,700.

This suggests to me that there might be a bit more to be gained by shifting the interval around, but not a whole lot.

@Gergely: Thanks! I’ve added a new section on “Benchmarks” on the wiki to record this (and the computations of Andrew and Christian on older sieves). If I read correctly, your calculation did not incorporate a shift by 1 that I noted in #34 (because the set [M+1,M+N] actually has diameter H=N-1 rather than N), so actually the lower bound should be $H \ge […]$.

@Terry: Indeed, I took MV’s $N$ for our $H$. So my bound is really $H \ge […]$.

Er, yes, you’re right, I somehow managed to subtract one off twice. :)

@Bryan: It occurred to me that this problem is a natural for one of Al Zimmerman’s programming contests (or something comparable). But I agree with Terry that it makes sense to wait a while. Also, many of these programming contests are the antithesis of the polymath approach — contestants are usually very secretive about their algorithms and the progress they are making.

@Terry: In the MV bound one can improve $3\pi/2$ to $\sqrt{22}$, see Brüdern: Einführung in die analytische Zahlentheorie, page 162. Probably this is in the original paper, too. This buys us $H \ge […]$.

@Terry

Ok, makes sense to hold off until we nail down k0 further.

@Andrew

Sure, some people are secretive, but I think just as many work in teams and share work. I guess it depends on the contest and its incentives.

@Gergely,

Montgomery (The analytic principle of the large sieve, page 557)

http://projecteuclid.org/DPubS?service=UI&version=1.0&verb=Display&handle=euclid.bams/1183540922

writes that, according to an unpublished note of Selberg, $3\pi/2$ can be replaced by 3.2 (this would give a larger improvement than $\sqrt{22}$).

(The comment is repeated e.g. here:

A note on the weighted Hilbert’s inequality

XJ Li – Proceedings of the American Mathematical Society, 2005.

But I did not follow the history on this constant.)

@Andrew and other programmers:

1) I am curious whether s=sqrt(interval-length) in Andrew’s program for sieving the small primes is strictly best possible. Of course, there may be a reason of Eratosthenes-sieve type (every composite has a prime divisor at most its square root).

2) Also: as you said that the min or max is best for choosing the class a (when the class of minimum frequency gives a tie), one could consider looking at classes that have very low (but maybe not minimum) frequency, as long as the class is small.

(We have two principles, frequency and closeness of the class to 0 mod p, but maybe we should weigh these principles slightly differently.)

A small comment: now that we have the benchmarks, it is perhaps worth experimenting with different techniques than the current champion technique (greedy-greedy on shifted intervals with randomised breaking of ties); if a new method beats, say, the Zhang benchmark of 411,932 then it could be worth pushing further even if it doesn’t directly beat the current world record. (Scott mentioned a while back that he had some experiments with simulated annealing, I would be curious to see if that developed any further.)

@Christian: To your question (1), I have the impression that the method is not very sensitive to the choice of what you’ve called s, in the sense that once you’ve sieved out far enough, 0 appears to be the unique minimum-frequency residue class for a rather wide band around sqrt(interval-length).

@Christian: Thank you! It is strange that no more work has been done on this constant (or perhaps the problem is hard). At any rate, Selberg’s provisional 3.2 in place of $3\pi/2$ yields $H \ge […]$, while the conjectured value $\pi$ would give $H \ge […]$.

I think the record claimed in comment 14 is missing in the wiki.

Added, thanks! (though in principle the wiki is free to edit by anyone, after a registration which was implemented due to spam issues).

@charles: I concur with pedant (post 51) regarding (1). Regarding (2), I think the effect is likely to be very small. I have played around with a few weighting ideas to break ties (including incorporating Terry’s suggestion to also consider distance to the end points), but none seems to work as well as randomness. It’s not that any particular random sequence is likely to be better, it’s just that you can repeat them ad infinitum on the same interval (compared to the exponential number of random tie-breaking choices, the total number of intervals worth trying is very small).

I experimented with several different approaches today, but the thing that has proven most effective is just optimizing the code to allow more random attempts in less time. I also ran enough tests to convince myself that, as Wouter suggested, while picking the largest residue class in [0,p-1] to break ties is not always better than picking the smallest, it is better more often than not, so I changed the probability used in random tie breaking to favor the largest residue class about 80% of the time.

The net of all this is an admissible sequence of length 34429 with diameter 387,620.

http://math.mit.edu/~drew/admissable_34429_387620.txt
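The random tie-breaking can be sketched as a variant of the greedy-greedy sieve with many restarts on the same interval. Assumptions: ties are resolved toward the largest tied class with probability 0.8 and toward the smallest otherwise, $b$ is the square root of the width, and the best of `trials` runs is kept. The names are hypothetical and this is not Drew’s code.

```python
import math
import random


def primes_up_to(n):
    """All primes <= n (sieve of Eratosthenes)."""
    if n < 2:
        return []
    is_p = [True] * (n + 1)
    is_p[0] = is_p[1] = False
    for i in range(2, math.isqrt(n) + 1):
        if is_p[i]:
            for j in range(i * i, n + 1, i):
                is_p[j] = False
    return [i for i in range(n + 1) if is_p[i]]


def is_admissible(S):
    """True if S misses at least one residue class mod every prime p <= len(S)."""
    return all(len({n % p for n in S}) < p for p in primes_up_to(len(S)))


def randomized_greedy(lo, hi, k0, rng, p_max=0.8):
    """One greedy-greedy run with random min/max tie-breaking."""
    b = math.isqrt(hi - lo)
    survivors = [n for n in range(lo, hi + 1) if n % 2 == 0]
    for p in primes_up_to(k0)[1:]:
        if p <= b:
            a = 0
        else:
            counts = [0] * p
            for n in survivors:
                counts[n % p] += 1
            least = min(counts)
            ties = [r for r in range(p) if counts[r] == least]
            # largest tied class with probability p_max, else the smallest
            a = ties[-1] if rng.random() < p_max else ties[0]
        survivors = [n for n in survivors if n % p != a]
        if len(survivors) < k0:
            return None  # early abort
    if len(survivors) < k0:
        return None
    i = min(range(len(survivors) - k0 + 1),
            key=lambda j: survivors[j + k0 - 1] - survivors[j])
    return survivors[i:i + k0]


def best_of(lo, hi, k0, trials, seed=0):
    """Repeat randomized runs on one interval and keep the narrowest result."""
    rng = random.Random(seed)
    best = None
    for _ in range(trials):
        s = randomized_greedy(lo, hi, k0, rng)
        if s is not None and (best is None or s[-1] - s[0] < best[-1] - best[0]):
            best = s
    return best
```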

This recently released paper might be useful for optimising the sieving.

http://arxiv.org/abs/1306.1497

A note on bounded gaps between primes

Janos Pintz

As a refinement of the celebrated recent work of Yitang Zhang we show that any admissible k-tuple of integers contains at least two primes and almost primes in each component infinitely often if k is at least 60000. This implies that there are infinitely many gaps between consecutive primes of size at most 768534, consequently less than 770000.

For what it’s worth, I’ve plotted Engelsma’s values of the minimal diameter $H(k)$ of an admissible $k$-tuple, for the range of $k$ covered by his data, in a graph, just to get an idea of where this might be going. The blue line corresponds to Engelsma’s findings. The red line is $k \log k$, which (at least in this range) is systematically dominated by the blue line. I’ve also plotted the difference (green line), which looks linear (with best fitting slope 0.9788), although Drew’s record suggests that instead it bends down slightly.

(Are there any finer heuristics known that would explain what this green line is?)

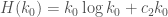

Extrapolating blindly to $k_0 = 34{,}429$, one finds that $H(k_0)$ should be larger than $k_0 \log k_0$, and that it should be “near” $k_0 \log k_0 + 0.9788\, k_0$. So this suggests that 387620 is already in the right range.

Again: for what these speculations are worth…
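The extrapolation is simple arithmetic. Assuming (our reading of the lost formulas) that the red line is $k \log k$ with natural logarithm and that the green difference has slope 0.9788, the two reference values for $k_0 = 34{,}429$ come out at roughly 359,700 and 393,400, bracketing the record of 387,620. The function name is hypothetical.

```python
import math


def extrapolate(k, slope=0.9788):
    """Return (k log k, k log k + slope * k): the red line and the fitted trend."""
    base = k * math.log(k)
    return base, base + slope * k
```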

I have provisionally computed a value of $k_0$ from Pintz’s new preprint, but this should be viewed as unconfirmed at present. Also, there is room for further optimisation here.

@Wouter: unfortunately, extrapolation is a bit dangerous. See Fig 2 of http://www.ccrwest.org/gordon/ants.pdf, which compares the Schinzel sieve against the prime counting function (the horizontal axis here is roughly $H$).

Regarding extrapolations (comment 58): based on the Gordon and Rodemich paper, and on our progress, my intuitive feeling is that so far the results are of the type: an interval of size $H$ admits $\frac{H}{\log H} + c_1 \frac{H}{(\log H)^2}$ integers, which might translate to $k_0 \log k_0 + c_2 k_0$.

For comparison, the number of primes (as, say, in Zhang’s sieve) gives $\pi(H) \approx \frac{H}{\log H} + \frac{H}{(\log H)^2}$, i.e. has $c_1 = 1$.

For a fixed size, reworking any bound or method into these constants $c_1, c_2$ could serve as some kind of measure.

(Asymptotically, that is, for increasing $k_0$ or increasing interval size, this is of course not clear: Gordon and Rodemich describe heuristics which suggest that maybe $c_1$ increases very slowly. Also, analysing the greedy-greedy algorithm and randomization asymptotically could be an interesting question…)

Addition, as some formulae do not parse: the expression after “which might translate to” is k_0 log k_0 + c_2 k_0.

For comparison, the number of primes in an interval of size H is about the logarithmic integral of H, which is asymptotically H/log H + H/(log H)^2 + error term, i.e. has c_1 = 1.

I found an admissible set of cardinality 11123 and diameter 113520, see http://maths-people.anu.edu.au/~angeltveit/admissible_11123_113520.txt

If Terry is right that k_0 can be taken to be 11123, that yields an improvement of approximately a factor of 3.

How about if we try the value of $k_0$ that v08ltu claims here: http://terrytao.wordpress.com/2013/06/03/the-prime-tuples-conjecture-sieve-theory-and-the-work-of-goldston-pintz-yildirim-motohashi-pintz-and-zhang/#comment-233178 ?

Unfortunately I won’t have time to write anything about simulated annealing for a few days; I’m heading off to a conference in a few hours and not taking a computer. Back before the greedy-greedy algorithm, when we were looking at Henley-Richards and shifted Henley-Richards sequences, I managed to (privately :-) beat some of the records using a simulated annealing process.

The idea was just to find a value of m that gave an admissible Henley-Richards sequence, and then reduce this a bit to start with an “almost admissible” sequence. Then I’d do a random walk, taking an element that fell into some minimally occupied residue class for some obstructed prime, and replacing it with something randomly chosen in the interval. However, before taking each step of the random walk, I’d check if it improved the “inadmissibility” of the sequence (which I took to be the sum of the sizes of the minimally occupied residue classes; so admissibility is the same as inadmissibility 0). If it did improve, I’d accept the step. If it didn’t improve, I’d accept it with some low probability.

(To really deserve the name simulated annealing you should let this probability decay to zero as you progress, but if you expect you’re unlikely to reach the global minimum, it’s not too important.)
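Below is a minimal Python sketch of the random walk just described, for anyone who wants to experiment. The inadmissibility score follows the description above: the sum over primes p ≤ k of the size of a minimally occupied class mod p. The function names, the acceptance probability `accept_prob` and the geometric `decay` schedule are illustrative assumptions, not the code actually used here. Recomputing class counts from scratch at every step is slow; it only keeps the sketch short.

```python
import random


def primes_up_to(n):
    """Primes <= n via a simple sieve of Eratosthenes."""
    if n < 2:
        return []
    flags = bytearray([1]) * (n + 1)
    flags[0] = flags[1] = 0
    for i in range(2, int(n ** 0.5) + 1):
        if flags[i]:
            flags[i * i :: i] = bytearray(len(range(i * i, n + 1, i)))
    return [i for i, f in enumerate(flags) if f]


def class_counts(H, p):
    """Occupancy of each residue class mod p."""
    counts = [0] * p
    for h in H:
        counts[h % p] += 1
    return counts


def inadmissibility(H, primes):
    """Sum over p of the size of a minimally occupied class mod p (0 iff admissible)."""
    return sum(min(class_counts(H, p)) for p in primes)


def anneal(H, lo, hi, steps, accept_prob=0.05, decay=0.999, seed=0):
    """Random walk on k-element subsets of [lo, hi] that lowers inadmissibility.

    A move removes an element lying in a minimally occupied class of an
    obstructed prime and inserts a random unused integer from [lo, hi].
    Improving moves are always accepted, others with probability accept_prob,
    which decays geometrically (a simple annealing schedule).
    Returns the best (set, score) seen.
    """
    rng = random.Random(seed)
    H = sorted(set(H))
    if hi - lo + 1 <= len(H):
        raise ValueError("interval must leave room for a replacement element")
    primes = primes_up_to(len(H))  # only p <= k can be fully covered
    score = inadmissibility(H, primes)
    best, best_score = list(H), score
    for _ in range(steps):
        if score == 0:
            break
        # score > 0 guarantees at least one prime with every class occupied
        obstructed = [p for p in primes if min(class_counts(H, p)) > 0]
        p = rng.choice(obstructed)
        counts = class_counts(H, p)
        m = min(counts)
        r = rng.choice([c for c in range(p) if counts[c] == m])
        old = rng.choice([h for h in H if h % p == r])
        members = set(H)
        new = rng.randint(lo, hi)
        while new in members:
            new = rng.randint(lo, hi)
        cand = sorted((members - {old}) | {new})
        cand_score = inadmissibility(cand, primes)
        if cand_score < score or rng.random() < accept_prob:
            H, score = cand, cand_score
            if score < best_score:
                best, best_score = list(H), score
        accept_prob *= decay
    return best, best_score
```

The size of the set never changes: each move swaps one element for one new element. Only the interval [lo, hi] bounds the diameter, so the starting interval plays the role of the reduced Henley-Richards parameter.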

Now, back then the bounds still had a lot of slack relative to current methods, so I have no idea if this is still worth pursuing. Someone should feel free to try some variation of this if they like; otherwise on Monday I’ll look again.

With k_0=10721 I found an admissible sequence of diameter 109314: http://maths-people.anu.edu.au/~angeltveit/admissible_10721_109314.txt

This can probably be improved quite easily; I didn’t search for very long. Some of the credit should go to Andrew Sutherland: I’m using a modified version of his C program from post 10.

Two new admissible sequences:

For k0=60000, with diameter 707,328

http://math.mit.edu/~drew/admissable_60000_707328.txt

For k0=10721 with diameter 108,990

http://math.mit.edu/~drew/admissable_10721_108990.txt

I also have sequence for k0=11018 and k0=11123 that I will post shortly.

For k0=11123 with diameter 113462:

http://math.mit.edu/~drew/admissable_11123_113462.txt

For k0=11018 with diameter 112302:

http://math.mit.edu/~drew/admissable_11018_112302.txt

Slight improvement to the last sequence above for k0=11018 now with diameter 112,272:

http://math.mit.edu/~drew/admissable_11018_112272.txt

I notice that it looks like k0 is now down to 10719. So far the best I can do for this is to trim the last two entries off the diameter 108,990 sequence of length 10721, leaving a sequence of length 10719. This yields an admissible sequence of diameter 108978, which I have posted here for reference:

http://math.mit.edu/~drew/admissable_10719_108978.txt

I also have some minor improvements to a few of the sequences posted for larger k0 values above, but I won’t bother posting them unless someone asks for them.

In terms of some alternative optimization algorithms besides the randomized greedy algorithm used to obtain all the sequences I posted above, I thought I’d mention a couple of ideas I have been playing with. They are all likely to be much more time-consuming than the randomized greedy approach, but as k0 gets smaller, this is less of an issue.

1) Yesterday I actually tried an approach that builds an admissible sequence within a given interval by adding integers one by one in a way that preserves admissibility, rather than sieving integers out of the interval. The idea is to start with a small initial set of integers, say of size sqrt(k0) (currently I do this by sieving out 0 mod small primes and picking a dense subset). Then assign a weight to every other integer in the interval based on how many *new* residue classes you would hit if you added the integer to the set (inversely weighted by the size of the prime). Pick the integer with the least weight, recompute all the weights, and repeat.

This proved to be very slow and didn’t produce inspiring results. But that doesn’t mean the idea can’t be made to work.

2) As a variation on the randomized greedy approach, today I want to look at implementing an intelligent look-ahead strategy when picking the residue class to sieve (e.g. compute the number of integers lost for various choices of residue classes for each of the next n primes, for some small n, maybe 3 or 4).

I plan to give this a try today.

3) Another approach would be to try to build large narrow admissible sets from smaller ones. Suppose someone gives you a min-diameter admissible set of size k0/2: can you use this to get a small-diameter admissible set of size k0?

4) Regarding Scott’s comments on simulated annealing (post 63), I have had good results with simulated annealing on other number-theoretic optimization problems (see http://ants9.org/slides/poster_caday.pdf, for example). I’m not sure how well this one fits a simulated annealing model. A simple version would be to modify the randomized greedy approach so that it is initially more flexible about its random choices (don’t always choose a residue class that kills a minimal number of integers), then gradually becomes less flexible as the temperature parameter goes down.

5) Finally, it might also be worth thinking about a branch-and-bound approach, but I haven’t really thought this through to any degree. We might need k0 to be quite a bit smaller before this becomes practical.
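Idea 1) can be sketched in Python as follows. This is a minimal, unoptimized illustration under stated assumptions:

* the caller supplies the seed set, rather than building it by sieving small primes;
* only primes p ≤ k0 are tracked;
* ties in weight go to the smallest integer.

The function name `grow_admissible` is hypothetical. Each step rescans the whole interval, which is consistent with the slowness reported for this approach.

```python
def primes_up_to(n):
    """Primes <= n via a simple sieve of Eratosthenes."""
    if n < 2:
        return []
    flags = bytearray([1]) * (n + 1)
    flags[0] = flags[1] = 0
    for i in range(2, int(n ** 0.5) + 1):
        if flags[i]:
            flags[i * i :: i] = bytearray(len(range(i * i, n + 1, i)))
    return [i for i, f in enumerate(flags) if f]


def grow_admissible(k0, lo, hi, seed):
    """Grow an admissible set inside [lo, hi] one integer at a time.

    For each prime p <= k0 we track the occupied residue classes. A candidate
    n is allowed only if every such p still has a free class afterwards, so
    the set stays admissible throughout. Its weight is the sum of 1/p over
    primes p for which n % p would be a *new* class; the lightest candidate
    is added (min() returns the first, i.e. smallest, on ties).
    Returns the sorted set, or None if no integer in [lo, hi] can be added.
    """
    primes = primes_up_to(k0)
    occupied = {p: set() for p in primes}
    H = []

    def allowed(n):
        return all(n % p in occupied[p] or len(occupied[p]) < p - 1 for p in primes)

    def weight(n):
        return sum(1.0 / p for p in primes if n % p not in occupied[p])

    def add(n):
        H.append(n)
        for p in primes:
            occupied[p].add(n % p)

    for n in sorted(set(seed)):
        if not allowed(n):
            raise ValueError(f"seed element {n} breaks admissibility")
        add(n)
    while len(H) < k0:
        members = set(H)
        candidates = [n for n in range(lo, hi + 1) if n not in members and allowed(n)]
        if not candidates:
            return None
        add(min(candidates, key=weight))
    return sorted(H)
```

For example, `grow_admissible(6, 0, 40, [0, 4])` grows the seed {0, 4} into a 6-element admissible set inside [0, 40].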

Interestingly, for k0=10719 (and smaller k0 in general, I suspect) one does better with an interval that does not span 0. Using the interval [7858,116492] I get an admissible sequence of diameter 108634.

http://math.mit.edu/~drew/admissable_10719_108634.txt

Layman’s question: how hard is it to pinpoint a number?

Here’s a thing one can try to enhance a given admissible set S. One looks for a reasonably nice admissible set T in the same region, takes the union S U T, and repeatedly applies the greedy-greedy algorithm starting from that union (with fully randomized tie breaks, i.e. not only min vs. max). As soon as the result is more narrow than T, replace T by that result and continue. In doing so one can hope to benefit from the “right” sieving choices that were made in the construction of S (by testing them against T), and to improve the bad ones.

All of this is a bit vague of course… In any case, I applied this to Drew’s record #69, peeling off a modest 2.

108632.txt

Probably the gain will always be very small, but maybe this trick can be improved?
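One way to express this merging trick in code is sketched below in Python. It assumes both inputs are admissible and that the small-prime classes (1 mod 2, 0 mod small p) are already absent from their union, as they are for greedy-greedy output. The helper names are hypothetical. Ties are broken uniformly at random, and a candidate replaces T only when it is strictly narrower.

```python
import random


def primes_up_to(n):
    """Primes <= n via a simple sieve of Eratosthenes."""
    if n < 2:
        return []
    flags = bytearray([1]) * (n + 1)
    flags[0] = flags[1] = 0
    for i in range(2, int(n ** 0.5) + 1):
        if flags[i]:
            flags[i * i :: i] = bytearray(len(range(i * i, n + 1, i)))
    return [i for i, f in enumerate(flags) if f]


def narrowest_window(H, k0):
    """Narrowest k0-element subset of H (always a run of consecutive elements)."""
    H = sorted(H)
    if len(H) < k0:
        return None
    i = min(range(len(H) - k0 + 1), key=lambda j: H[j + k0 - 1] - H[j])
    return H[i : i + k0]


def randomized_greedy(pool, k0, primes, rng):
    """For each prime p <= k0 in increasing order, remove a minimally occupied
    residue class mod p (ties broken uniformly at random), then keep the
    narrowest k0 survivors. Every p <= k0 then misses a class, so the result
    is admissible."""
    S = sorted(pool)
    for p in primes:
        counts = [0] * p
        for s in S:
            counts[s % p] += 1
        m = min(counts)
        r = rng.choice([c for c in range(p) if counts[c] == m])
        S = [s for s in S if s % p != r]
    return narrowest_window(S, k0)


def merge_improve(S, T, k0, rounds=100, seed=0):
    """Repeatedly re-sieve S | T; whenever the result is narrower than T,
    it becomes the new T."""
    rng = random.Random(seed)
    primes = primes_up_to(k0)
    best = sorted(T)
    for _ in range(rounds):
        cand = randomized_greedy(set(S) | set(best), k0, primes, rng)
        if cand is not None and cand[-1] - cand[0] < best[-1] - best[0]:
            best = cand
    return best
```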

I found a slightly better sequence for k0=10719 with diameter 108600.

http://math.mit.edu/~drew/admissable_10719_108600.txt

Wouter, perhaps you can try your trick again with this one?

Ok, I just did. Now the gain is a little more (but still not a lot):

108570.txt

Ok, so by running a new version of my algorithm (which also looks for post-optimizations around the end points) I was able to improve the diameter of your sequence to 108556.

http://math.mit.edu/~drew/admissable_10719_108556.txt

Can you further improve this sequence?

Hi, unfortunately I found an error in Pintz, and it checked out with what I got from a Ben Green post (though neither has confirmed). See Terry Tao’s blog comments. If this is indeed the case, the new constraint is $1/4 \le 207\omega + 43\delta$. Via optimization, I get that $\delta = 1/942$ allows $k_0=25111$, the latter being best possible. Sorry…

http://terrytao.wordpress.com/2013/06/03/the-prime-tuples-conjecture-sieve-theory-and-the-work-of-goldston-pintz-yildirim-motohashi-pintz-and-zhang/#comment-233310

For benchmarking purposes, let me just note that the Schinzel sieve didn’t go under 113000. Eratosthenes, while slightly better, basically remained at the same level (that is, for k=11,018).

So far pure greedy works best for me, although it still lags behind the current records.

I’ve hacked together Andrew’s idea 2 but Python is painfully slow and I’m still waiting for the results.

@Drew: No, at least not immediately. I’ll let it run for some longer time.

But it’s not unlikely that the point of saturation has already been reached here. Note that, when compared to your 108600 sequence, the whole lot has been shifted to the right a bit. I guess that’s what this trick is capable of doing: once you are very near a “hotspot”, it can push the sequence towards it. Once you’re on it, it won’t help any more.

Anyway, according to v08ltu’s post #75, we’re back to a larger $k_0$!

Just found a solution based on the two solutions in #74 and #72:

http://www.cs.cmu.edu/~xfxie/project/admissible/admissable_10719_108550.txt

I want to leave a quick remark about something that as far as I’ve been able to tell doesn’t help, in case it gives someone else a better idea. One might imagine that (after the initial sieve of primes less than the square root of the interval length) there’s a better order in which to sieve than simply in increasing order of the remaining primes. [Well, perhaps that’s unimaginable to an analytic number theorist; I am not one!]

For instance, rather than sieving out one of the minimally-occupied residue classes for the smallest currently-obstructed prime, I wondered whether it might be better to sieve a minimally-occupied residue class at a prime p for which the ratio (multiplicity of the least-obstructed residue class)/(average multiplicity of a residue class) was minimized (or in other words for which p*(multiplicity of least-occupied residue classes) was minimized). However, in my experiments it looks to me like this does a little bit worse than just sieving the primes in order.

For finding the solution in #78, I have built a meta-heuristic algorithm. The basic idea is to take an existing solution as the incumbent solution, and then to use elements of other solutions as reference elements for further improving the incumbent. But I found that some heuristics (e.g., adding one, adding two and removing one) do not work. So the final version is a GreedyALL version: first add all external elements, then remove some of them greedily, minimizing the conflicting cost down to 0.

I found this problem is somewhat similar to finding an independent set in graph coloring, so I added some basic tricks to reduce the computational time of local operations.

BTW: In #78, the incumbent solution is from #74, and the reference elements are from #72.

I will post the code (in Java) after cleaning it up.

Is the following true?

————————————————–

Primes are uniformly distributed

Let p, r, n be positive integers with p>1.

U(p, r, n) denotes the number of primes less than n that are congruent to r (mod p).

For any prime p and pair of integers r1, r2 between 1 and p-1, we have:

The ratio U(p,r1,n) / U(p,r2,n) has limit 1 as n goes to infinity.

Here’s a sequence to get the ball rolling with k0=25,111, with diameter 275,424. I’m sure it can be improved:

http://math.mit.edu/~drew/admissable_25111_275424.txt

@xfxie: I’ll be interested to see the details. It looks like both you and Wouter could benefit from having a number of “good” sequences that you can then try to improve. I’ll try to find a couple of different ones.

I was able to further improve the sequence from #78 for k0=10719, bringing the diameter down to 108,540. This k0 may no longer be relevant, but I thought I would post it as a matter of interest. We seem to be able to profitably iterate different optimization techniques.

http://math.mit.edu/~drew/admissable_10719_108540.txt

@pedant: Regarding post 79, I also tried varying the order of the primes, but like you, I have so far not found it to be beneficial.

Here is another sequence for k0=25111 that’s in a different area and slightly better, with diameter 275,418.

http://math.mit.edu/~drew/admissable_25111_275418.txt

Another one:

For any number e > 1, there exists a number N, such that for any M > N, there is at least one prime between M and e x M.

Oops, I realize I should have posted this sequence, which is essentially the same but slightly narrower:

http://math.mit.edu/~drew/admissable_25111_275404.txt

Using the merging trick, sequence #87 can be brought down to 275,292. Can your endpoint optimizations narrow this further?

25111_275292.txt

(@ZTOA, check Bertrand’s postulate)

@Wouter: I think I’ve got it down to 275266 using your merging trick.

oh, excellent! (and even to 275264 I noticed)

Just got a solution 275388 based on #87 (the reference elements are from another solution generated by Andrew’s software).

http://www.cs.cmu.edu/~xfxie/project/admissible/admissible_25111_275388.txt

I just checked #88 (using Andrew’s software). It is admissible, but k0=25013. Maybe I am wrong, because I had to pre-process the data since the format is different.
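For checking posted sequences, here is a minimal Python sketch. It assumes the file contains only the tuple's integers, comma- or whitespace-separated. Because the formats in this thread differ, `parse_tuple` simply extracts every integer it finds; all names here are illustrative. Only primes p ≤ k need to be checked, because k integers cannot cover all p residue classes when p > k.

```python
import re


def primes_up_to(n):
    """Primes <= n via a simple sieve of Eratosthenes."""
    if n < 2:
        return []
    flags = bytearray([1]) * (n + 1)
    flags[0] = flags[1] = 0
    for i in range(2, int(n ** 0.5) + 1):
        if flags[i]:
            flags[i * i :: i] = bytearray(len(range(i * i, n + 1, i)))
    return [i for i, f in enumerate(flags) if f]


def parse_tuple(text):
    """Extract every (possibly negative) integer from a file's contents."""
    return [int(tok) for tok in re.findall(r"-?\d+", text)]


def is_admissible(H):
    """True if H misses at least one residue class mod p for every prime p.

    Only primes p <= |H| can be fully covered, so only those are checked.
    """
    H = sorted(set(H))
    return all(len({h % p for h in H}) < p for p in primes_up_to(len(H)))


def summarize(H):
    """Return (number of distinct elements, diameter, admissible?)."""
    H = sorted(set(H))
    return len(H), H[-1] - H[0], is_admissible(H)
```

For example, `summarize(parse_tuple(open(path).read()))` reports the size, diameter and admissibility of a downloaded sequence, where `path` stands for a local copy. Duplicates are collapsed before counting, so a size smaller than expected often points to a pre-processing problem rather than a bad sequence.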

Starting from your sequence, my computer then found an admissible sequence of diameter 275126. Probably that’s not the end of it.

@andrew: I just saw #82. Yes, the current realization assumes the incumbent state is a good solution, but the reference elements can come from a “plain” solution (or multiple of them).

@xfxie, our messages crossed. By “starting from your sequence” I meant the one of pedant.

It would be interesting if you could apply your machinery to the above sequences. I think they consist of the right number of elements, so I would guess that something goes wrong with pre-processing the data. But I’ll double-check it tomorrow (I’ll call it a night here). If you wish I can then give them in Andrew’s format.

@Wouter: I just checked 25111_275248.txt on the dropbox; it is correct. Sorry for the mistake: I had pre-processed the data by hand.

@Wouter: That’s great! (but I think the link to your 275126 sequence is broken?) I think I’ve got my code set now so that it can run without my interference (i.e. it can re-start itself if it gets stuck), so I’ll let it run through the night. I’m down to 275214 now, myself; same folder as in #89.

Let’s hope there’s no horrible bug whereby the program puts all sorts of incorrect nonsense in the dropbox folder while I’m asleep. (:

@Wouter: Based on your comment, I ran the software on your solution 25111_275214.txt and obtained 275208.

The solution 275208 is located at:

http://www.cs.cmu.edu/~xfxie/project/admissible/admissible_25111_275208.txt

BTW: 25111_275214.txt may be from @pedant (#97); I just downloaded it from the dropbox.

@xfxie: Yes, the dropbox here is mine (and updates automatically if the program finds something new).

@pedant: thanks for noticing. Here’s a (hopefully) working link: 275126.

@Wouter: thanks! We must be doing something a bit different from each other, because my admissible sequences were drifting somewhat. The one of diameter 275266 started at -118532, and by the time I’d gotten the diameter down to 275166 the starting point had drifted up to -116542. Your 275126, by contrast, was very close to my 275266.

I’ve re-started the program beginning with your 275126 and have it down to 275090 now. Hopefully we can get mileage from taking turns like this!

Since it seems that we’re back to k0 = 34429 for the time being (see here), I’ve switched over to running on that, starting on Drew’s 387620 in comment #56. There’s a 387534 in the Dropbox now (link at comment #100).

I think one can take k0=26024 from the corrections to the improvements in the first half of Pintz (assuming the rest of it is Ok), and the noted expression (30) for kappa. I heard some chatter of improving kappa in any event.

@v08ltu: Thanks for keeping us up to date on k0 developments.

I’ve just kicked off a scan for narrow admissible sequences of length k0=26024. I should have some sequences to post in a few hours.

Here’s a starting point for k0=26024

http://math.mit.edu/~drew/admissable_26024_286224.txt

I’ll post a few more as I find them.

Some new records:

http://math.mit.edu/~drew/admissable_25111_274894.txt

http://math.mit.edu/~drew/admissable_26024_285810.txt

http://math.mit.edu/~drew/admissable_34429_387006.txt

These were all generated with a modified version of the randomized greedy algorithm that I think effectively incorporates a version of Wouter’s optimization (it generates multiple sequences in the same interval and then plays them off against each other). I also added a “contraction” step: whenever an admissible sequence is generated, it checks whether either endpoint can be replaced by an interior point (and if so, does this). Previously I was only doing this check at the end, but interleaving contractions seems to work better.

@pedant, @xfxie, @wouter: I’ll be curious to see how easy it is for you to further optimize these. Probably 26024 is the one to focus on, since this appears to be the current k0, and it is also the sequence that I expect is the least optimized. We all seem to be doing something slightly different, so it will be interesting to see if we can continue to profitably interleave our optimizations.

Found a solution 286216 for k0=26024, based on #106:

http://www.cs.cmu.edu/~xfxie/project/admissible/admissible_26024_286216.txt

@Drew: OK, I’m at work on the 26024 record. Found a 285798 very quickly, and am now at 285758; see the dropbox (post #100), which will be updated automatically as the code runs further.

@Drew,xfxie,Wouter: Conversely, I’d be interested to know how easily your algorithms can improve on the 34429 / 387380 that’s in the dropbox. It started from Drew’s 387620, was pared down to 387380 in a rapid series of improvements, and then was stuck there for most of the night.

It looks like each of the two parts of the deduction leading to $k_0 = 26024$ has been confirmed, so we’re going to declare this value as “safe to use”. Sorry for all the confusion over the past 24 hours!

@Terry: that’s good news, thanks!

Two new records:

http://math.mit.edu/~drew/admissable_34429_386814.txt

http://math.mit.edu/~drew/admissable_26024_285498.txt

I guess the latter gives the current best bound on H of 285,530, using k0=26024 (but I’m sure this bound will be improved in short order).

For the benefit of those experimenting with various optimizations that work from a good starting point, here is a sequence for 26024 that is not as good as the record above. It uses a substantially different interval, and it is close enough to the current record that some optimization might push it over the top.

http://math.mit.edu/~drew/admissable_26024_285660.txt

@pedant: Starting from your 387380 sequence for k0=34429 I was able to work down to 387176, but this doesn’t really mean anything other than that there is a better sequence in roughly the same area.

The algorithm I am using now doesn’t really depend that much on the starting sequence (by default it just generates one in a given interval). It’s really just a matter of the interval, since after enough iterations it’s capable of completely changing the sequence. The sequences for 26024 listed above were both obtained starting with not very good sequences: the total diameter improvement from start to finish was well over 1000. In any case, here is the sequence I wound up with:

http://math.mit.edu/~drew/admissable_34429_387176.txt

I’m starting to converge on an algorithm that I’m pretty happy with (at least until I think of something better!). If I have time this afternoon I’ll post the details of exactly what I am doing. I’ll eventually post C source code, but I want to wait until things stabilize before I take the time to clean it up and make it presentable.

Oops, I realize I actually had a better sequence for k0=34429 sitting in my directory:

http://math.mit.edu/~drew/admissable_34429_386750.txt

That’s it for k0=34429.

I am getting a little bit lost. I think it’s more important to concentrate on figuring out the best algorithm for creating narrow admissible sets and leave the hand fine-tuning for minute improvements when the other group has confirmed the best $k_0$ possible.

I’ve created a table of (some of the) narrow admissible sequences here: https://docs.google.com/spreadsheet/ccc?key=0Ao3urQ79oleSdEZhRS00X1FLQjM3UlJTZFRqd19ySGc&usp=sharing (please ask me for editing rights to help me add more!). So far it seems that Sutherland leads in density with 10719_108540.

Which leads me to the question: “Is there any better measure than density?” I’ve noticed it’s easier to produce shorter sequences of higher density, so maybe we should discount for that. Such a measure could later be used as a heuristic in the search for “the optimal sequence”.
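As one possible answer, here is a small Python sketch of two normalized measures (the names are hypothetical):

* the ratio diameter/(k0 log k0);
* the constant c_2 in diameter ≈ k0 log k0 + c_2 k0, in the spirit of the earlier suggestion to rework bounds into such constants.

Natural logarithms are assumed. Both measures discount for k0 to first order, so records for different k0 can be compared more fairly than by raw density.

```python
import math


def log_ratio(k0, diameter):
    """diameter / (k0 * log k0); close to 1 when diameter ~ k0 log k0."""
    return diameter / (k0 * math.log(k0))


def c2(k0, diameter):
    """Solve diameter = k0*log(k0) + c2*k0 for c2."""
    return (diameter - k0 * math.log(k0)) / k0


# (k0, diameter) pairs taken from sequences posted in this thread
records = [(10719, 108514), (26024, 285458), (34429, 386750)]
for k0, d in records:
    print(f"k0={k0:6d}  diam={d:7d}  ratio={log_ratio(k0, d):.4f}  c2={c2(k0, d):.4f}")
```

The two measures are tied by ratio = 1 + c2/log k0, so they rank records identically at a fixed k0 and differ only in how they scale across k0.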

On another note, I’ve played with Fourier analysis a bit this morning. It seems that generally the diameter of an admissible sequence H grows as 10*length(H). However, if one computes the first difference of H and feeds it to an FFT, the results can be pretty interesting. See https://github.com/vit-tucek/admissible_sets for examples on small sizes.

@Vít #112: I think the point here is just to start to understand the fine-tuning process; the interest does not lie in whatever records happen to be found during that experimentation. Indeed it may well be that the best way to find very good narrow admissible sets in practice will be to produce good narrow admissible sets in some structural way, and then to improve them by some perturbation method. In the absence of any new good structural ideas for creating narrow admissible sets from scratch, we’re thinking about the perturbation step, so that we’re ready to put that process to work when new values of $k_0$ are obtained from improvements to $\varpi$.

I just put the code online:

http://www.cs.cmu.edu/~xfxie/project/admissible/admissibleV1.0.zip

In my experience, it really takes some good luck to find better solutions using the current implementation.

But it might be a good starting point for implementing various meta-heuristics that might be more efficient.

BTW: the code is in Java.

@Vit: I agree 100% that this is all about developing the best algorithm. Having said that, the back and forth between Castryck, pedant, xfxie and myself over the past 24 hours has been extremely helpful in this regard — the realization that we could consistently improve each other’s sequences and being able to go and compare the specific details in the differences has led to substantially better algorithms. Let me take a shot at describing the algorithm I am now using — I’m sure the others can chime in with their own comments and/or additions.

Let me first give the broad strokes of the algorithm; I’ll fill in details of each step below. The essential components of the algorithm are a randomized version of the “greedy-greedy” algorithm that breaks ties at random, and the merging process suggested by Castryck in post 71.

Given k0, generate a dense admissible sequence of length k0 as follows:

1) Determine an easy target diameter D.

2) Determine a good interval I of width D.

3) Use the greedy algorithm to construct an admissible sequence H1 in I. Contract H1 (as explained below).

4) Repeat the following steps indefinitely:

(a) Use the randomized-greedy algorithm to construct an admissible sequence H2 in the interval [b0-delta,b1+delta], where [b0,b1] is the smallest interval containing H1 and delta is a small fraction (I use delta = 0.0025). Contract H2.

(b) if diam(H2) < diam(H1), replace H1 with H2 and go back to (a).

(c) Let S = H1 union H2. By construction, S will avoid odd numbers and 0 mod p for p up to sqrt(D). Now greedily sieve S of residue classes for each prime p > sqrt(D) up to k0, breaking ties randomly. This will yield a new admissible sequence H3. Contract H3.

(d) If diam(H3) < diam(H2), replace H2 by H3; otherwise repeat step (c) up to some maximum number of retries (I use 5-10). If you cannot reduce the diameter of H2, go back to step (a). If you can, go to step (b).

Now for some more details:

1) Determining the target diameter is easy to do empirically, e.g. run the greedy algorithm on increasingly large intervals centered about the origin until it succeeds. To first order, the diameter should be about k0*log(k0), see https://perswww.kuleuven.be/~u0040935/k0graph.png

2) To determine a good interval, I run the deterministic greedy algorithm (breaking ties upward, as suggested by Castryck) on the sequence of intervals [b0,b0+D], for b0 varying from -1.5*D up to 0.5*D in jumps of, say, 0.001*D. This will generally suggest several good areas to look for dense admissible sequences.

3) To "contract" an admissible sequence means to check whether there is any interior point that can replace an end point, or whether there is a point past an end point that could replace the other end point and yield a narrower sequence. Most sequences produced by the greedy algorithm are not contractable, but enough of them are that it is still worth checking. It's not necessary to contract at every stage (it takes time to check, so there is a cost), but I wrote it that way for simplicity.

4a) The parameter delta is critical. This "fudge factor" gives the algorithm enough room to easily construct H2, and moreover, it actually allows it to gradually move the target interval around, which is a good thing. It also helps to avoid the "saturation" that Castryck mentioned.

4c) Note that this is guaranteed to succeed, since S is already known to contain an admissible sequence (in fact two).

4d) The number of retries to allow is a performance trade-off. Too few and you might give up too early; too many and you waste time beating your head against the wall.
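Here is a minimal Python sketch of the contraction step from 3) above. It tries dropping either endpoint and inserting any unused integer x that keeps the diameter strictly smaller and the set admissible. When x lies strictly inside, this is an interior replacement; when x lies beyond the opposite end, it is the “point past an end point” case. Two simplifying assumptions are made: the first improvement found is taken, and admissibility is re-checked from scratch each time. An efficient version would update residue counts incrementally instead.

```python
def primes_up_to(n):
    """Primes <= n via a simple sieve of Eratosthenes."""
    if n < 2:
        return []
    flags = bytearray([1]) * (n + 1)
    flags[0] = flags[1] = 0
    for i in range(2, int(n ** 0.5) + 1):
        if flags[i]:
            flags[i * i :: i] = bytearray(len(range(i * i, n + 1, i)))
    return [i for i, f in enumerate(flags) if f]


def is_admissible(H):
    """True if H misses some residue class mod p for every prime p <= |H|."""
    H = sorted(set(H))
    return all(len({h % p for h in H}) < p for p in primes_up_to(len(H)))


def contract(H):
    """Repeatedly replace an endpoint by another integer while that keeps the
    set admissible and strictly narrows it; return the contracted set."""
    H = sorted(set(H))
    while True:
        old = H[-1] - H[0]
        members = set(H)
        improved = None
        for drop in (H[0], H[-1]):
            rest = [h for h in H if h != drop]
            lo, hi = rest[0], rest[-1]
            # every x in the open interval (hi - old, lo + old) yields diameter < old
            for x in range(hi - old + 1, lo + old):
                if x not in members and is_admissible(rest + [x]):
                    improved = sorted(rest + [x])
                    break
            if improved:
                break
        if improved is None:
            return H
        H = improved
```

For example, `contract([0, 2, 6, 8, 20])` moves points inward step by step until no endpoint can be replaced, ending with a 5-element admissible set of diameter 12.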

Now the algorithm above may sound somewhat involved, but it is actually quite easy to implement using the "greedy-greedy" code I posted earlier, it is very fast, and it is easy to parallelize (run as many simultaneous copies as you want). There are a few performance optimizations worth making, but for the sake of space I won't comment on them here. When I have time I will clean up my code and post a C implementation of the algorithm above.

As evidence of the efficacy of the algorithm, I ran it with the initial sequence H1 set to the diameter 108540 sequence for k0=10719 that you noted as the best in your table. (By the way, the density should asymptotically be about log(k0), so you really should weight by this when comparing different k0's.) It then quickly found a diameter 108514 sequence in roughly the same area. I never could have found it with the original deterministic greedy algorithm, and it would have taken ages to find with a blind randomized greedy algorithm. Here is the sequence:

http://math.mit.edu/~drew/admissable_10719_108514.txt

I see that step 4c got garbled when I cut and paste, it should read:

(c) Let S = H1 union H2. By construction, S will avoid odd numbers and 0 mod p for p up to sqrt(D). Now greedily sieve S of residue classes for each prime p > sqrt(D) up to k0, breaking ties randomly. This will yield a new admissible sequence H3. Contract H3.

To “greedily sieve” means to pick a residue class that hits a minimal number of elements of S. When there is more than one choice, pick one at random (I currently do this uniformly, but I could argue for weighting it upward).

A modest improvement to the current record for k0=26024, produced by the algorithm described in #116. Diameter 285,458.

http://math.mit.edu/~drew/admissable_26024_285458.txt

Drew, do you mean [b0-delta*D,b1+delta*D] in 4(a)?

What I’ve been doing is very close to what Drew is doing, so let me just note the differences. I have no particular reason to think that these differences are improvements, but diversity for its own sake may help.

* I haven’t been doing the contraction step (but probably I should!).

* My implementation of greedy-randomized greedy is slightly different. After sieving out 0 mod p for p up to sqrt(D), I continue to sieve out the residue class 0 mod p for all subsequent primes (up to k_0, of course) for which 0 is a minimally-occupied residue class. I have no evidence that this is better or worse than just sieving up to sqrt(D). (To be honest, for a while I’d forgotten that I was doing this; it’s an ‘option’ that I thought I had turned off. But when I noticed I was still doing it, I left it in in the name of diversity.) The randomized greedy step then proceeds in the same way.

* Assuming that [b0-delta*D,b1+delta*D] was meant in 4(a) of Drew’s post, my delta is somewhat smaller than Drew’s, roughly 0.0014. As a result, the admissible set H2 sometimes has fewer than k_0 elements in it, so I have to repeat step 4(a) until I hit one that has at least k_0 elements in it.

* At the end of step 4(c), in lieu of the contraction step, I replace H3 (which may have more than k_0 elements in it) with the narrowest subset of H3 of size k_0, breaking ties randomly in case there are several equally narrow subsets. (This means that in the first pass through step 4(c), the set H2 comes from 4(a) and may have more than k_0 elements, but in subsequent passes it has exactly k_0 elements.)

* In step (d), I’ve been allowing more re-tries (100).
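Pedant’s replacement for contraction is easy to sketch in Python: keep the narrowest k_0-element subset of H3, breaking ties at random. Two facts make this work. First, a narrowest k-element subset of a sorted set can always be taken to be a run of k consecutive elements. Second, H3 was sieved at every prime up to k_0, so any k_0-element subset of it is admissible. The function name is hypothetical.

```python
import random


def narrowest_subset(H, k0, rng=None):
    """Return a narrowest k0-element subset of H, i.e. a window of k0
    consecutive elements in sorted order. Ties are broken with rng if
    given, otherwise the leftmost window is returned. Returns None if H
    has fewer than k0 elements."""
    H = sorted(set(H))
    if len(H) < k0:
        return None
    widths = [H[i + k0 - 1] - H[i] for i in range(len(H) - k0 + 1)]
    best = min(widths)
    starts = [i for i, w in enumerate(widths) if w == best]
    i = rng.choice(starts) if rng is not None else starts[0]
    return H[i : i + k0]
```

Passing a seeded `random.Random` instance keeps runs reproducible while still randomizing the choice among equally narrow windows.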

Oops, also meant to say, I’ve been letting delta vary in a small band (from 0.0013 to 0.0015) just to introduce another source of variation in the process.

@Drew, thanks a lot for this description, and for the 285458 sequence: I wouldn’t have guessed that there would be so much room for improvement!

I never wrote a fully automated implementation of the algorithm. I just have a number of pieces in Magma (e.g. deterministic greedy-greedy, and something which resembles 4(a)(c)(d) in Drew’s above description) that I combined by hand. My implementations are too slow to keep up with the ones of Drew and pedant, anyway.

Some differences (none of which I believe are improvements):

* My delta was usually somewhat smaller (less than 0.001), and in fact my interval was not always chosen symmetrically around [b0,b1]. Sometimes I tried to pull things to the right or to the left by giving more freedom on one side. I don’t know if this helped (sometimes this resulted in a nice sequence, but that might have been a coincidence).

* I experimented a bit with giving higher probability to certain residue classes. Here the story is the same as in pedant’s case: I put it in at some point, and then forgot about it. I don’t have enough statistics to tell whether it helps, but my impression is that it doesn’t really matter.

@pedant: Yes, I meant [b0-delta*D,b1+delta*D] in 4(a), thanks. Even with my larger delta it can happen that fewer than k0 elements are left after sieving, in which case I just retry. Whenever there are more than k0, I always pick the narrowest k0, as in the original greedy-greedy implementation. It may still happen that this admissible sequence is then contractible (the larger the input interval is relative to the diameter of the result, the more likely this is; as S gets smaller, H3 becomes less likely to be contractible).

Another interesting thing to look at is the difference between the size of S and k0. In step 4(a) with k0=26024 this will typically be around 500 or 1000, and then gradually dwindle down to around 20 or 30 as steps 4(c) and 4(d) repeat. I originally used the parameter T=|S|-k0 as the guideline for when to restart in 4(a) (wait until T is below some small fraction of k0, say 0.1 percent), but this occasionally meant the algorithm would get stuck, so I put in the max retries parameter.

In simulated annealing terms, you can view T as the temperature of the system – it represents the amount of freedom the algorithm has in choosing an admissible subset of S. One way to describe this algorithm would be as iterated simulated annealing with a greedy backbone (we are basically being greedy; the only randomness is in tie-breaking, but this already allows a lot of freedom).

@vit I love your FFT images, very cool! I’d be curious to see the same for these two sequences, also for k0=26024, but in significantly different intervals.

http://math.mit.edu/~drew/admissable_26024_285660.txt

http://math.mit.edu/~drew/admissable_26024_285774.txt

I’ve been using the number of obstructed primes for S as my “temperature” parameter, probably not so different in practice from what you’re doing. Actually, here’s maybe a better way to go about it that occurs to me now (I haven’t implemented this yet): keep track of the amount of information in the random choices being made, i.e. the quantity b := the sum over obstructed primes p for S of log(# of minimally occupied residue classes). If b gets stuck above a certain threshold, allow yourself a certain maximum number of retries; when b falls below that threshold, just iterate (in a random order?) through all exp(b) possibilities until you either exhaust the possibilities or find a winning choice.
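The quantity b is straightforward to compute. Below is a minimal Python sketch, assuming S is the current (not yet admissible) sieved set and `primes` the primes to check; the function names are hypothetical. One caveat: `all_choices` treats the per-prime choices as independent, which is what the exp(b) count implicitly does; in a sequential sieve, removing a class for one prime can change the counts for later primes, so this enumeration is only a heuristic.

```python
import itertools
import math
from collections import Counter


def minimal_classes(S, p):
    """Residue classes mod p containing the fewest elements of S (among occupied ones)."""
    counts = Counter(x % p for x in S)
    m = min(counts.values())
    return sorted(c for c, n in counts.items() if n == m)


def obstructed_primes(S, primes):
    """Primes p for which S meets every residue class mod p."""
    return [p for p in primes if len({x % p for x in S}) == p]


def choice_information(S, primes):
    """b = sum over obstructed p of log(number of minimally occupied classes mod p)."""
    return sum(math.log(len(minimal_classes(S, p))) for p in obstructed_primes(S, primes))


def all_choices(S, primes):
    """All combinations of one minimally occupied class per obstructed prime
    (exp(b) of them when the choices are treated as independent)."""
    obs = obstructed_primes(S, primes)
    return itertools.product(*[[(p, c) for c in minimal_classes(S, p)] for p in obs])
```

For S = [0, 1, 2, 3] and primes [2, 3], both primes are obstructed with two minimally occupied classes each, so exp(b) = 4 and `all_choices` yields four combinations.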

Comment from a newbie ignoramus – I hope not too irrelevant:

It seems that there is a lot of effort going into optimising the best interval for a given k0 in the current range, but I’m not seeing any talk about optimising the efficiency of algorithms to identify the provably best interval for a given (necessarily smaller) k0. In view of the rapidity with which improvements in omega and thus in k0 may plausibly occur over the next weeks or months, my impression is that there is a case for putting in a fair amount of work now into improving the efficiency of algorithms to derive the provably best interval for a given k0, so that the implications of improvements in omega can be quantified at once as soon as omega gets big enough. (All, of course, under the presumptions that we are already close to getting the best k0 for a given omega and that we will not see big departures from Zhang-esque overall proof structures.) Is such work happening, and, am I right that there is a case for it? If yes or no respectively, please elaborate :-)

@Andrew: I’ve uploaded the images to GitHub. The source there also contains the code to produce them. (On Windows I can recommend downloading WinPython, which gives you all the packages you need, plus the Spyder IDE, which is IMHO quite good. The plot command then pops up a new “figure” window where you can zoom/pan/change the scale to logarithmic, etc.)

I’ve been thinking about better graphical representation of admissible sequences but I haven’t come up with anything so far. All the information is contained in the sifted residue classes… If only there was some sort of number theoretical version of FFT… :)

Thanks a lot for explaining your algorithms, guys. Unfortunately I am too busy to come up with more code of my own.

@Aubrey: In my opinion you have a fair point, although the complexity of this problem is quite high. On the other hand, the best results so far on provably optimal sets (if I am not mistaken) reach sizes of around 1000 and date from around 2000, so there may be some room for improvement. I was actually thinking about pinging the authors who have written on the topic to see whether they are still interested, but I am too busy at the moment.

@Vit: I’m not entirely sure what to make of the FFT plots, but they are definitely fun to look at, thanks.

@Aubrey: During the past week we haven’t had a single value of k0 that was stable for more than about 48 hours. But certainly once we are confident k0 has been optimized to the extent possible, lower bounds on H for that k0 become interesting. In fact for k0=34,429, which briefly looked stable, the lower bound H >= 234,322 was established (see the records page). This is almost certainly much lower than the true value, and far below the best upper bound H <= 386,750 we have (see post 112). I’m sure someone will crank through the numbers for k0=26,024 if it stands up for more than a few days. In terms of getting tight bounds on H, as Vit indicated, this is probably hopeless for k0=26,024, but if k0 gets small enough it might become feasible.

Some benchmarks for k0=26,064:

Zhang (m=655): 303,558

Hensley-Richards (m=723): 297,454

Asymmetric Hensley-Richards (m=723, i=13542): 297,076

The current best using the algorithm in #114 is 285,456.

http://math.mit.edu/~drew/admissable_26024_285456.txt

After letting the algorithm run over night, the diameter is down to 285,436.

http://math.mit.edu/~drew/admissible_26024_285436.txt

Just injected some new components into the algorithm to exploit the problem structure, and the performance improved.

The solution 285446 is built on #128:

http://www.cs.cmu.edu/~xfxie/project/admissible/admissible_26024_285446.txt

The solution 285432 is built on #129:

http://www.cs.cmu.edu/~xfxie/project/admissible/admissible_26024_285432.txt

The basic trick is to build a core set and allow plateau moves.

Just generated more solutions:

http://www.cs.cmu.edu/~xfxie/project/admissible/admissible_26024_285420.txt

http://www.cs.cmu.edu/~xfxie/project/admissible/admissible_26024_285418.txt

http://www.cs.cmu.edu/~xfxie/project/admissible/admissible_26024_285406.txt

@xfxie: excellent. Here’s a similar but slightly better sequence, can you improve this one?

http://math.mit.edu/~drew/admissible_26024_285430.txt

Sorry, here is a better one to work from:

http://math.mit.edu/~drew/admissible_26024_285378.txt

@andrew: Just got #133 as well, and then the algorithm got stuck on the plateau for many rounds. The algorithm might need some perturbation operations.

Maybe pedant’s algorithm can help escape from the local minimum.

Just a new one:

http://www.cs.cmu.edu/~xfxie/project/admissible/admissible_26024_285328.txt

Another one (285324):

http://www.cs.cmu.edu/~xfxie/project/admissible/admissible_26024_285324.txt

@xfxie: I agree, some perturbations definitely appear to help, I’m now making progress again, down to

http://math.mit.edu/~drew/admissible_26024_285326.txt

Or even 285318:

http://math.mit.edu/~drew/admissible_26024_285318.txt

285314; there seem to be many plateau levels:

http://www.cs.cmu.edu/~xfxie/project/admissible/admissible_26024_285314.txt

Stuck at 285304:

http://www.cs.cmu.edu/~xfxie/project/admissible/admissible_26024_285304.txt

Still making progress…

http://math.mit.edu/~drew/admissible_26024_285288.txt

@xfxie 131: I agree on the plateau moves. In terms of the description in #116, I changed the algorithm to replace H2 with H1 in 4(b) even when the diameters are equal. This happens quite often, and usually H2 and H1 will still have the same endpoints, but *different* interior points. After letting several threads run for a few minutes, I found that they were all using different H1’s of the same diameter in the same interval. This gives the algorithm more freedom to find new solutions.
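The change amounts to relaxing the acceptance test from "strictly narrower" to "no wider". The following is a minimal sketch of that rule, paired with a hypothetical sideways move (swap one interior point for another point strictly between the endpoints, keeping admissibility). This is just one simple way to generate equal-diameter candidates, not the move used in #116 or by xfxie, and all function names are hypothetical.

```python
import math
import random


def primes_up_to(n):
    """All primes <= n (trial division; fine for small examples)."""
    return [m for m in range(2, n + 1) if all(m % d for d in range(2, math.isqrt(m) + 1))]


def is_admissible(H):
    """H misses at least one residue class mod p for every prime p <= |H|."""
    return all(len({h % p for h in H}) < p for p in primes_up_to(len(H)))


def diameter(H):
    return max(H) - min(H)


def sideways_move(H, rng, tries=200):
    """Hypothetical plateau move: swap one interior point (|H| >= 3 assumed) for a
    new point strictly between the endpoints, keeping admissibility. Endpoints stay
    fixed, so the diameter is unchanged. Returns None if no valid swap is found."""
    H = sorted(H)
    members = set(H)
    for _ in range(tries):
        out = rng.choice(H[1:-1])
        new = rng.randrange(H[0] + 1, H[-1])
        if new in members:
            continue
        candidate = sorted((members - {out}) | {new})
        if is_admissible(candidate):
            return candidate
    return None


def accept(candidate, current):
    """Accept ties as well as strict improvements, so the search can drift along a plateau."""
    return candidate is not None and diameter(candidate) <= diameter(current)


def plateau_walk(H, rng, rounds=1000):
    """Repeatedly propose sideways moves and keep them under the <= rule."""
    for _ in range(rounds):
        candidate = sideways_move(H, rng)
        if accept(candidate, H):
            H = candidate
    return H
```

On its own the walk never changes the diameter; the point is that it changes the interior points, giving a subsequent improving step (such as the 4(c)/4(d) iterations) a different starting configuration.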

I’m now down to 285,278:

http://math.mit.edu/~drew/admissible_26024_285278.txt

I don’t have time to implement this right now as I have plans for the rest of the morning, but here’s a remark about how one might try to make these improvements in a systematic way, rather than using randomness. Hopefully one of the others will be able to make this go.

If S is an admissible set, I’ll say that c is an unobstructed residue class mod p if S contains no elements that are congruent to c (mod p); otherwise I’ll say that c is obstructed. I’ll say that c is a minimally obstructed residue class mod p if c is obstructed and the number of elements in S that are congruent to c (mod p) is minimal among obstructed classes.

Start from an admissible set S, let I be the interval [Min(S)..Max(S)], and look for a pair of primes (p,q) with the following properties.

* there exists a minimally obstructed class c for p, and a minimally obstructed class d for q, such that T1 := { x in S : (x mod p) eq c } and T2 := { x in S : (x mod q) eq d } have nontrivial intersection. Let the sizes of these sets be C, D respectively.

* there exist unobstructed classes c’ for p and d’ for q such that there are at least C elements in T3 := { x in I : (x mod p) eq c’ } such that adjoining them to S would only obstruct p, and at least D elements in T4 := { x in I : (x mod q) eq d’ } such that adjoining them to S would only obstruct q.

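Then one can alter S by deleting T1, T2, and either endpoint of S, and adjoining C elements of T3 and D elements of T4, to obtain a narrower admissible set with the same number of elements. (The count works because T1 and T2 overlap: their union has at most C + D - 1 elements, so adjoining C + D new elements also makes up for the deleted endpoint.)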
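The (p, q) move can be sketched in Python as follows. Assumptions: S is a sorted admissible set, only primes up to |S| are checked, the left endpoint is the one deleted, and the first C (resp. D) qualifying points of T3 (resp. T4) are used. The sketch also adds a condition of its own: points of T3 must avoid the class d mod q, and points of T4 the class c mod p, since otherwise they would re-occupy a class that deleting T1 or T2 just freed. With that extra condition the result is automatically admissible. `try_swap` and `find_swap` are hypothetical names.

```python
import itertools
import math
from collections import Counter


def primes_up_to(n):
    return [m for m in range(2, n + 1) if all(m % d for d in range(2, math.isqrt(m) + 1))]


def minimal_classes(S, r):
    """Minimally obstructed classes mod r: occupied classes with the fewest elements of S."""
    counts = Counter(x % r for x in S)
    m = min(counts.values())
    return [c for c, n in counts.items() if n == m]


def try_swap(S, p, c, c2, q, d, d2):
    """Attempt the (p, q) move for one choice of classes; None if it does not apply."""
    S = sorted(S)
    members = set(S)
    primes = primes_up_to(len(S))
    occ = {r: {x % r for x in S} for r in primes}
    T1 = {x for x in S if x % p == c}
    T2 = {x for x in S if x % q == d}
    if not T1 & T2:
        return None  # T1 and T2 must share an element

    def only_obstructs(x, own, other, freed):
        # x may open a new class mod `own`; mod every other prime it must reuse a
        # class occupied by S, and it must avoid the class freed mod `other`.
        return (x not in members and x % other != freed
                and all(x % r in occ[r] for r in primes if r != own))

    interval = range(S[0], S[-1] + 1)
    T3 = [x for x in interval if x % p == c2 and only_obstructs(x, p, q, d)][: len(T1)]
    T4 = [x for x in interval if x % q == d2 and only_obstructs(x, q, p, c)][: len(T2)]
    if len(T3) < len(T1) or len(T4) < len(T2):
        return None
    # |T1 | T2| <= C + D - 1, so the result has at least |S| elements;
    # keep the narrowest run of |S| consecutive ones.
    new = sorted((members - T1 - T2 - {S[0]}) | set(T3) | set(T4))
    k = len(S)
    return min((new[i:i + k] for i in range(len(new) - k + 1)), key=lambda w: w[-1] - w[0])


def find_swap(S):
    """Try all (p, q, c, c', d, d'); return the first narrower set found, else None."""
    primes = primes_up_to(len(S))
    free = {}
    for r in primes:
        used = {x % r for x in S}
        free[r] = [a for a in range(r) if a not in used]
    for p, q in itertools.permutations(primes, 2):
        for c, c2, d, d2 in itertools.product(
                minimal_classes(S, p), free[p], minimal_classes(S, q), free[q]):
            H = try_swap(S, p, c, c2, q, d, d2)
            if H is not None:
                return H
    return None
```

On the toy set S = [0, 6, 8, 30, 36], `find_swap` ends up using p = 3, q = 5, c = 2, d = 3, c’ = 1, d’ = 2 (so T1 = T2 = {8}, T3 = {10}, T4 = {12}) and returns [6, 10, 12, 30, 36], narrowing the width from 36 to 30.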

For instance, one can get from S := Drew’s 285458 (post #118) to his 285456 (post #128) in this manner. Take p = 2543 and q = 3863. The class c = 716 is minimally obstructed for 2543, and d = 1998 is minimally obstructed for 3863; we have T1 = {-34886,-50144,102436} and T2 = {102436}, with nontrivial intersection. We’re forced to take c’ = 2270 (it’s the only unobstructed class mod p in S), but fortunately T3 = {-70036,-53758,92744} has size 3. There are multiple choices for T4; the one he happens to use is d’ = 3829, T4 = {38596}.

(This does not produce exactly the set of diameter 285456 that Drew has, as there are also various sideways moves, e.g. 110756 is removed and 29258 is added, affecting only which classes mod 4513 are obstructed. Perhaps this is part of what xfxie means by moving around on a plateau, but that terminology is too vague for me to know for sure.)

One can of course imagine improvements where you allow yourself to add elements just beyond one endpoint of I, so long as you don’t go further than the distance from the other endpoint of I to the nearest interior element of S (so that deleting that endpoint still more than pays for the extension).

@pedant: I can implement this as a generalization of the contract step in #116. Another optimization I have added is to try shifting the set by successively removing points from one end and adding them to the other (in a way that preserves admissibility). One occasionally gets a reduction in diameter this way.
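A shifting step of this kind might look like the following minimal sketch. The function name `shift_right` is hypothetical, and the details are assumptions: points always move from the left end to the right end, and each new point is the smallest integer above the current maximum that keeps the set admissible.

```python
import math


def primes_up_to(n):
    return [m for m in range(2, n + 1) if all(m % d for d in range(2, math.isqrt(m) + 1))]


def is_admissible(H):
    """H misses at least one residue class mod p for every prime p <= |H|."""
    return all(len({h % p for h in H}) < p for p in primes_up_to(len(H)))


def shift_right(H, steps):
    """Repeatedly drop the leftmost point and append the smallest point above the
    current maximum that keeps the set admissible; return the narrowest set seen."""
    H = sorted(H)
    best = H
    for _ in range(steps):
        rest = H[1:]
        y = rest[-1] + 1
        # Terminates: any y congruent to the dropped point modulo every prime
        # <= |H| reuses only occupied classes, so some admissible y always exists.
        while not is_admissible(rest + [y]):
            y += 1
        H = rest + [y]
        if H[-1] - H[0] < best[-1] - best[0]:
            best = H
    return best
```

For example, shifting [0, 6, 8, 30, 36] once gives [6, 8, 30, 36, 38], reducing the width from 36 to 32; a second shift gives a wider set, so the best one seen is kept.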

I’ve started a new wiki page at

http://michaelnielsen.org/polymath1/index.php?title=Finding_narrow_admissible_tuples

to describe all the known sieves for finding good tuples, as well as lower bounds, and also a more systematic table for the benchmarks. I didn’t describe the most recent sieves (greedy-greedy and beyond); if someone who is more familiar with the more recent methods could give some descriptions there (and maybe also links to code etc.) that would be great!

@Tao, Sutherland: Is 26,064 in the table a typo? (I was expecting 26,024.)

Oops, that was a typo and has been corrected (on the wiki at least), thanks. (I’m assuming it was a typo in #128 as well.)

@Tao: I can take a shot at this; I was planning to LaTeX up a more coherent version of post #116 in any case, but it may be tomorrow before I can get to it. And yes, 26,064 in #128 was a typo; it should have been 26,024.

I was also going to suggest recording the best upper bounds we have for some of the older/provisional values of k0 in the records table (some of these didn’t make it in during the confusion when k0 was unstable, or were posted after they were obsolete). If k0 happens to change in the future these would be good to have (e.g. the best upper bound 386,750 for the previous k0=34,429 (post #112) isn’t listed, but this could become relevant if for some reason k0=26,024 came into question). I can put these into the table if you want, just let me know.